LATEST ARTICLES

Video trends · 10 min read

Behind the scenes of video production at api.video — A tête-à-tête with our video editor

Go behind the scenes with our video editor at api.video! Discover the ins and outs of video production, from creative planning to final cut, and learn what it takes to bring great content to life.

Multiple authors · November 12, 2024

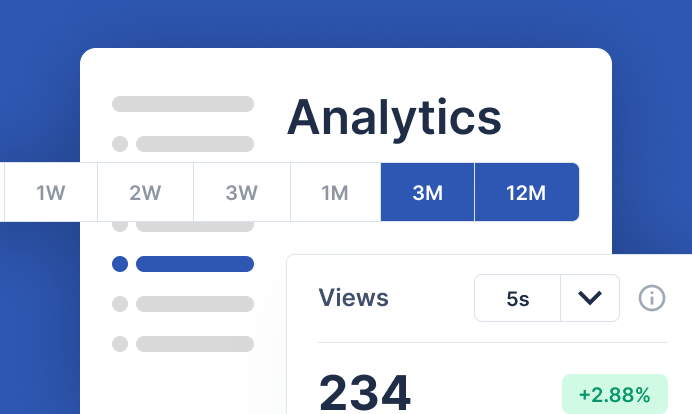

Product updates · 3 min read

An upgraded Analytics is here: Deeper insights, better tracking

Discover our biggest Analytics upgrade yet! Check out expanded metrics, new dimensions, and more.

Arushi Gupta · November 6, 2024

Video trends · 8 min read

Token-based authentication: The key to safer API interactions

Learn how token-based authentication in web APIs secures sensitive data and simplifies access control.

api.video · October 28, 2024

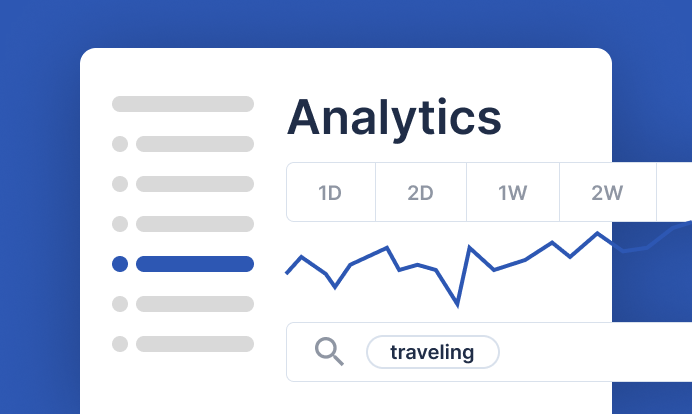

Video trends · 4 min read

How we use Analytics at api.video to optimize our video content — and you can too!

We share the approach we use in-house, with practical tips and techniques for optimizing your own video content with Analytics.

Multiple authors · October 18, 2024

Product updates · 3 min read

Introducing AI video summarization: Derive key moments from hours of content

Discover how our AI video summarization can instantly extract key moments from hours of content, saving you time and boosting productivity.

Cédric Montet · October 23, 2024

Product updates · 3 min read

The wait is over: AI video transcription officially launches today

Learn about our latest AI transcription feature, designed to make your videos more accessible, searchable, and engaging.

Cédric Montet · October 15, 2024

Video trends · 9 min read

Closed captions vs. subtitles: What is the difference?

Discover the key differences between closed captions and subtitles. Learn how they're created, when to use each, and their impact on accessibility and user experience.

Arushi Gupta · October 3, 2024

Video trends · 7 min read

MKV vs. MP4: Understanding the differences

Explore the differences between MKV and MP4 in this detailed guide. Learn which format is better suited to streaming and where each one falls short.

Arushi Gupta · September 24, 2024

Tutorials · 8 min read

What is a webhook, and how can you use webhooks for real-time video management?

Get answers to key questions: what webhooks are, how they work, and how you can use them for video management.

api.video · September 12, 2024

Product updates · 4 min read

Introducing Video Restore: Insure your videos against accidental deletion

With the Video Restore feature, you can now recover accidentally deleted videos in just a few clicks.

api.video · September 19, 2024