Tutorials · 10 min read

Building record.a.video part 3: the MediaRecorder API

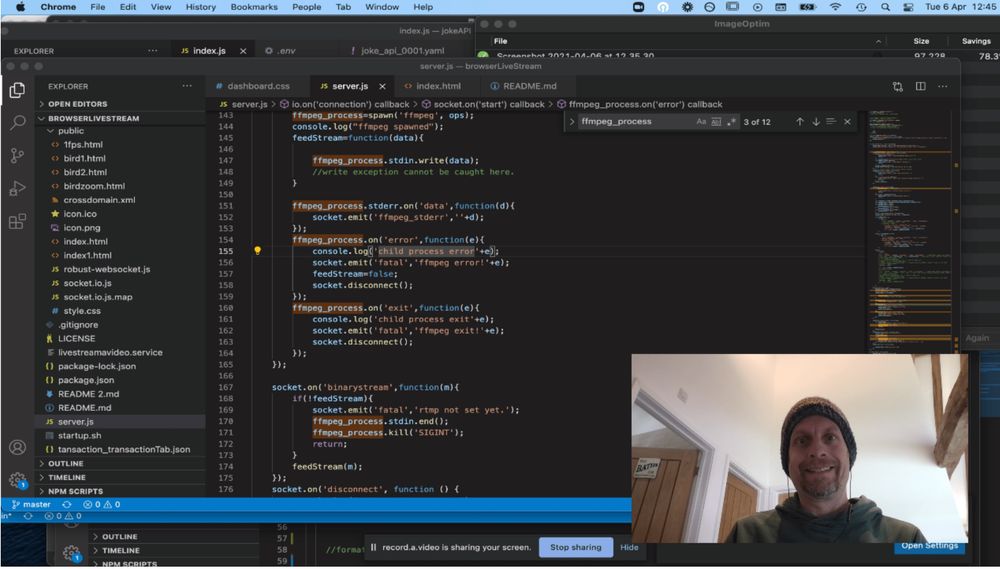

As we continue our series on creating record.a.video, I'll discuss the MediaRecorder API, which I use to record a video stream from the browser canvas and produce the output video. This output stream then feeds into either the video upload (for video-on-demand playback) or the live stream (for immediate, live playback).

Doug Sillars

April 7, 2021

We’ve just released record.a.video, a web application that lets you record and share videos. If that were not enough, you can also livestream. The page works in Chrome, Edge, Firefox, Safari (14 and up), and on Android devices. This means that the application will work for about 75% of people using the web today. That’s not great, but since there are several new(ish) APIs in the application, it also isn’t that bad!

This is part 3 of a continuing series on the interesting web APIs I used to build the application.

- In post 1, I talked about the getUserMedia API to record the user's camera and microphone.
- In post 2, I discussed recording the screen using the Screen Capture API. Using the video streams created in posts 1 & 2, I draw the combined video on a canvas.
- In this post, I'll discuss the MediaRecorder API, which I use to create a video stream from the canvas and record the output video. This output stream feeds into the video upload (for video-on-demand playback) or the live stream (for immediate, live playback).
- In post 4, I'll discuss the Web Speech API. This API converts audio into text in near real time, which lets me create 'instant' captions for any video recorded in the app. It is an experimental API that only works in Chrome, but it was so neat that I included it in record.a.video anyway.

Introduction

In the first two posts, we walked through obtaining video streams from the camera and screen, and composed them on a canvas. Now, we want to take the composed video from the canvas and record it. To do this recording, we'll use the MediaRecorder API.

The MediaRecorder API

Now that we have our composed video on the canvas, the next step is to create a video stream of the canvas and send it to the MediaRecorder.

First, we create a stream that captures the canvas at 25 frames per second:

```javascript
stream = canvas.captureStream(25);
```

This stream is silent. A canvas only produces video, and none of the video fed into the canvas carried audio (this prevents feedback loops between the app, mic, and speakers). So we request an audio stream from the mic and add its audio track to the canvas stream:

```javascript
audioStreamOptions = {
  mimeType: "video/webm;codecs=vp8,opus",
  // mimeType: "video/mp4",
  audio: {
    deviceId: micId
  }
};

// Grab the audio and add it to the stream coming from the canvas.
// (await means this must run inside an async function.)
audioStream = await navigator.mediaDevices.getUserMedia(audioStreamOptions);
for (const track of audioStream.getTracks()) {
  console.log("adding audio track");
  stream.addTrack(track);
  console.log("stream added audio", stream);
}
```

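You might notice some experimentation with MIME types in the audio options. This will be a recurring theme in this post. (getUserMedia itself ignores a mimeType entry; MIME types only matter once the stream reaches the MediaRecorder.)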
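The track-merging loop above can be pulled into a small helper. This is a sketch, not the app's code. `addAudioTracks` is a hypothetical name. It calls `getAudioTracks()` so that only audio is copied from the mic stream, even if the constraints accidentally requested video too. It also works on mock objects, which makes it testable outside a browser.

```javascript
/**
 * Hypothetical helper (not from record.a.video): copy every audio track from
 * `micStream` into `canvasStream` so the recorder sees video + audio.
 * Accepts real MediaStream objects or anything exposing the same methods.
 */
function addAudioTracks(canvasStream, micStream) {
  const added = [];
  for (const track of micStream.getAudioTracks()) {
    // MediaStream.addTrack already ignores duplicates; this check keeps the
    // returned list accurate (and makes plain mock objects behave the same way).
    if (!canvasStream.getTracks().includes(track)) {
      canvasStream.addTrack(track);
      added.push(track);
    }
  }
  return added; // the tracks that were actually added
}

// Browser usage (inside an async function):
// const canvasStream = canvas.captureStream(25);
// const micStream = await navigator.mediaDevices.getUserMedia({ audio: { deviceId: micId } });
// addAudioTracks(canvasStream, micStream);
```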

Now that we have a stream with our video and audio, we are ready to create a MediaRecorder instance to ... well ... record the media.

Creating the MediaRecorder

While researching how to build with the MediaRecorder, I found this great post by Sam Dutton from Google. He recommended choosing the MediaRecorder's MIME type based on what the browser supports, which seemed like a good idea:

```javascript
let options;
// Check and request exactly the same MIME strings
if (MediaRecorder.isTypeSupported('video/webm;codecs=vp9')) {
  options = {mimeType: 'video/webm;codecs=vp9'};
} else if (MediaRecorder.isTypeSupported('video/webm;codecs=vp8')) {
  options = {mimeType: 'video/webm;codecs=vp8'};
} else {
  // ...
}
mediaRecorder = new MediaRecorder(stream, options);
```
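Here is a variant of that pattern as a sketch. It keeps the "first supported type wins" logic, but if nothing in the list is supported it leaves `mimeType` out so the browser chooses, which is where this post ends up. The candidate list and the bitrates are assumptions; the `,opus` suffix names the audio codec explicitly. `isSupported` is injected so the logic runs outside a browser.

```javascript
/**
 * Return the first candidate the predicate accepts, or undefined if none is.
 * In the browser, pass (t) => MediaRecorder.isTypeSupported(t).
 */
function pickMimeType(candidates, isSupported) {
  for (const type of candidates) {
    if (isSupported(type)) return type;
  }
  return undefined;
}

// Hypothetical candidate list, most preferred first.
const CANDIDATES = [
  "video/webm;codecs=vp9,opus",
  "video/webm;codecs=vp8,opus",
  "video/mp4",
];

/** Build MediaRecorder options; omit mimeType entirely when nothing matched. */
function buildRecorderOptions(isSupported) {
  const options = { audioBitsPerSecond: 100000, videoBitsPerSecond: 4000000 };
  const mimeType = pickMimeType(CANDIDATES, isSupported);
  if (mimeType) options.mimeType = mimeType;
  return options;
}

// Browser usage:
// const options = buildRecorderOptions((t) => MediaRecorder.isTypeSupported(t));
// mediaRecorder = new MediaRecorder(stream, options);
```

As the Safari testing later in this post shows, a browser reporting a type as supported did not guarantee a usable recording. Treat this as a starting point, not a guarantee.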

This ended up being a headache, which I'll discuss in the testing section below. In the end, I just let the browser decide the MIME type.

Here is my startRecording function. There's a lot of logging going on here (the reason will become clear when I discuss the issues that arose).

```javascript
function startRecording() {
  // If I omit the MIME type, MediaRecorder works in Safari 14.0.3. If I add a MIME type... it fails.
  // I had a mimeType in the options and it would not record properly.
  var options = { audioBitsPerSecond: 100000, videoBitsPerSecond: 4000000 };
  // var options = 'video/mp4';
  recordedBlobs = [];
  try {
    mediaRecorder = new MediaRecorder(stream, options);
    console.log("options", options);
    console.log("mediaRecorder mime", mediaRecorder.mimeType);
  } catch (e0) {
    console.log('Unable to create MediaRecorder with options Object: ', options, e0);
    try {
      options = { mimeType: 'video/webm;codecs=vp8,opus', bitsPerSecond: 100000 };
      mediaRecorder = new MediaRecorder(stream, options);
      console.log("options", options);
    } catch (e1) {
      console.log('Unable to create MediaRecorder with options Object: ', options, e1);
      try {
        // The second argument must be an options object, not a bare MIME string
        options = { mimeType: 'video/mp4' };
        mediaRecorder = new MediaRecorder(stream, options);
        console.log("options", options);
      } catch (e2) {
        alert('MediaRecorder is not supported by this browser.');
        console.log('Unable to create MediaRecorder with options Object: ', options, e2);
        console.error('Exception while creating MediaRecorder:', e2);
        return;
      }
    }
  }
  console.log('Created video MediaRecorder', mediaRecorder, 'with options', options);
  console.log("mediaRecorder stream info", mediaRecorder.stream);
  console.log("mediaRecorder stream track info", mediaRecorder.stream.getTracks());
  mediaRecorder.onstop = handleStop;

  if (live) {
    // Livestream: tell the server which codec to expect, then forward every chunk
    console.log("mime", mediaRecorder.mimeType);
    socket.emit("config_vcodec", mediaRecorder.mimeType);
    mediaRecorder.ondataavailable = function (e) {
      // console.log("e", e.data);
      socket.emit("binarystream", e.data);
      state = "start";
      // chunks.push(e.data);
    };
    document.getElementById("video-information").innerHTML =
      'Live Stream available after 20s <a href="' + live_url + '">here</a>';
  } else {
    // Recording: save each chunk as a blob
    console.log("saving blob");
    // mediaRecorder.ondataavailable = handleDataAvailable;
    mediaRecorder.ondataavailable = function handleDataAvailable(event) {
      console.log("data-available");
      if (event.data && event.data.size > 0) {
        console.log("event.data", event.data);
        const blobby = new Blob([event.data], { type: mediaRecorder.mimeType });
        console.log("blobby", blobby);
        recordedBlobs.push(blobby);
        console.log(recordedBlobs);
        console.log("handledataavailable", recordedBlobs.length);
      }
    };
  }

  mediaRecorder.start(10); // deliver a chunk of data roughly every 10 ms
  console.log('MediaRecorder started', mediaRecorder);
}
```

Breaking this down: we create a MediaRecorder and let the browser choose the MIME type. The try/catch fallbacks for VP8 and MP4 remain, but in practice they are not used, since the first attempt (where the browser chooses on its own) succeeds.

If the record.a.video session is a livestream, the ondataavailable handler sends each chunk of video over the socket to a Node server, which forwards it to the api.video livestream.
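The livestream branch can be isolated into a function. This sketch reuses the event names from `startRecording` (`config_vcodec`, `binarystream`). It assumes `emit` wraps the app's existing `socket.emit`; that wrapper and the function name are hypothetical. Unlike the original handler, it skips empty chunks.

```javascript
/**
 * Hypothetical sketch of the livestream branch. `recorder` is a MediaRecorder
 * (or anything with mimeType and ondataavailable); `emit(name, payload)`
 * stands in for socket.emit.
 */
function attachLiveForwarding(recorder, emit) {
  // Tell the server which container/codecs the chunks will use
  emit("config_vcodec", recorder.mimeType);
  recorder.ondataavailable = (event) => {
    // Forward only non-empty chunks to the server
    if (event.data && event.data.size > 0) {
      emit("binarystream", event.data);
    }
  };
}

// Browser usage, with `socket` being the app's existing connection:
// attachLiveForwarding(mediaRecorder, (name, payload) => socket.emit(name, payload));
```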

If the session is a recording for video on demand, each chunk is written into a blob whenever the dataavailable event fires. These blobs are collected in the recordedBlobs array and uploaded when the recording is complete.
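Before upload, the collected chunks need to become a single file. The post doesn't show `handleStop`, so this is a hedged sketch of the merge step only. `combineChunks` is a hypothetical name, and the upload call itself is left out. `Blob` is a browser global (and also a global in Node 18+).

```javascript
/**
 * Hypothetical sketch: merge recorded chunks into one Blob for upload.
 * `chunks` would be recordedBlobs; `mimeType` would be mediaRecorder.mimeType.
 */
function combineChunks(chunks, mimeType) {
  if (chunks.length === 0) {
    throw new Error("No data was recorded");
  }
  // Keep arrival order: the chunks only form a valid file when concatenated in sequence
  return new Blob(chunks, { type: mimeType });
}

// e.g. in handleStop:
// const video = combineChunks(recordedBlobs, mediaRecorder.mimeType);
// ...then upload `video` to api.video
```

Order matters here. With a WebM recording, the container header typically arrives only in the first chunk, so dropping or reordering chunks produces a broken file.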

There's a lot of logging to console here, so let me describe what I discovered during my testing.

Testing the MediaRecorder

Chrome & Edge

I ran most of my tests in Chrome. Chrome supports VP9, and in my initial testing I used the Google approach of forcing the MIME type (VP9 -> VP8 -> other) on the MediaRecorder. This worked perfectly with VP9, and both live and VOD recordings worked as I expected. Edge, being based on Chromium, also worked just fine.

Firefox

Testing in Firefox also went fine. It was the first browser to hit the fallback: Firefox does not support VP9, so it created the MediaRecorder with the VP8 MIME type. I had issues adding the audio track until I added opus to the MIME type. After that, the MediaRecorder worked as expected, whether creating a recording or streaming from Firefox.

Safari

I spent about a day trying to figure out what was going on with Safari. The MediaRecorder API only became supported in Safari 14, so it is a new addition to the browser, and I had to shake the API a bit to make it work the way I wanted.

I started testing with Safari 14.0.2.

When I forced the MIME type choice (as in the Google article above), Safari chose the VP9 MIME type (or at least it did not fail the check, and then silently ignored VP9). The video recorded fine, but once it was uploaded to api.video, it played as a static image while the audio played as expected. That was sort of odd.

If I removed the stream.addTrack(track) call that adds the audio to the stream feeding the MediaRecorder, I got a silent video, but the video had motion.

My initial take was that there was a problem with the stream feeding the video into the MediaRecorder, and that it was only reading one track for some reason, so I began debugging there. Each of my tests was 10-15 seconds of me recording my screen and saying "test test test" a bunch of times.
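When debugging a "one track only" problem like this, it helps to log exactly which tracks reach the recorder. This is a hypothetical helper, not from the app. It only reads standard MediaStreamTrack properties (`kind`, `label`, `readyState`, `enabled`).

```javascript
/**
 * Hypothetical debugging helper: summarize the tracks a stream carries
 * (for example mediaRecorder.stream).
 */
function describeTracks(stream) {
  return stream.getTracks().map((t) => ({
    kind: t.kind,             // "audio" or "video"
    label: t.label,           // device or source label
    readyState: t.readyState, // "live" or "ended"
    enabled: t.enabled,
  }));
}

/** True only if at least one live audio and one live video track are present. */
function hasAudioAndVideo(stream) {
  const live = describeTracks(stream).filter((t) => t.readyState === "live");
  return live.some((t) => t.kind === "audio") && live.some((t) => t.kind === "video");
}

// Browser usage:
// console.table(describeTracks(mediaRecorder.stream));
// if (!hasAudioAndVideo(mediaRecorder.stream)) console.warn("recorder is missing a track");
```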

None of the recordings played correctly after upload to api.video. I logged into the backend to see whether there might be a bug in the transcoding at api.video. With some help from the dev team, I noticed a few interesting things:

These 5-10s videos were showing up in our system with durations between 16 and 48 hours. That was unexpected. :D

Looking at the ffprobe output for one of these videos, there are several issues:

```text
[STREAM]
index=0
codec_name=h264
codec_long_name=H.264 / AVC / MPEG-4 AVC / MPEG-4 part 10
profile=High
codec_type=video
codec_time_base=107/2640
codec_tag_string=avc1
codec_tag=0x31637661
width=1280
height=720
<snip>
start_pts=101581627
start_time=169302.711667
duration_ts=101583621
<snip>
```

First off, this video should have had the VP9 WebM MIME type, but it appears to be H.264 in an MP4 container (codec_tag_string=avc1). Hmm.....

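Second, start_pts is 101581627 and duration_ts is 101583621. These values are counted in ticks of the stream's time base, not in milliseconds. Dividing start_time (169302.711667 seconds) by start_pts gives 1/600 of a second per tick. Read that way, the stream claims to begin about 47 hours in, and duration_ts works out to about 169,306 seconds, also roughly 47 hours. The only number that looks sane is the gap between the two values: 1,994 ticks, or about 3.3 seconds, which is close to the length of the actual ~3s clip.

Our API takes the reported duration literally, so every test clip was recorded as tens of hours of video. After running just a few tests over 2 days, my account showed "192,000 minutes" (that's ~133 days!) of uploaded video. Needless to say, I was one of the top accounts that day (in reality, my videos were all under 10s).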
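You can check these numbers by converting the tick counts with the stream's time base instead of assuming milliseconds. This is a sketch. The time_base line was cut from the ffprobe snippet above, so the code infers it from start_time / start_pts; treat 1/600 as derived, not reported.

```javascript
// Fields copied from the ffprobe output above (other lines omitted).
const probe = `
start_pts=101581627
start_time=169302.711667
duration_ts=101583621
`;

/** Parse ffprobe's key=value lines into a plain object of strings. */
function parseProbe(text) {
  const fields = {};
  for (const line of text.split("\n")) {
    const eq = line.indexOf("=");
    if (eq > 0) fields[line.slice(0, eq).trim()] = line.slice(eq + 1).trim();
  }
  return fields;
}

const f = parseProbe(probe);
const startPts = Number(f.start_pts);
const startTime = Number(f.start_time); // already in seconds
const durationTs = Number(f.duration_ts);

// Seconds per tick, inferred because the time_base line was snipped (≈ 1/600)
const secondsPerTick = startTime / startPts;
const durationHours = (durationTs * secondsPerTick) / 3600;
const gapSeconds = (durationTs - startPts) * secondsPerTick;
// For comparison: reading duration_ts as milliseconds gives ≈ 28.2 h, which matches nothing
const ifMilliseconds = durationTs / 1000 / 3600;

console.log(`time base ≈ 1/${Math.round(1 / secondsPerTick)}`);
console.log(`reported duration ≈ ${durationHours.toFixed(1)} h`);
console.log(`duration_ts - start_pts ≈ ${gapSeconds.toFixed(1)} s`);
console.log(`as milliseconds ≈ ${ifMilliseconds.toFixed(1)} h`);
```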

At this point I had no idea what was going on, so I started logging to the console. I found that each blob was indeed being written as MP4, despite the MediaRecorder options specifying VP9. Tweaking MIME types everywhere did not help.
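One way to catch this kind of silent substitution is to compare the requested MIME type with what the recorder, or a recorded chunk, reports. This is a hypothetical check. It compares only the container part (such as `video/webm` or `video/mp4`) and ignores codec parameters, since browsers may normalize those differently.

```javascript
/** Extract the lowercase container part of a MIME string, e.g. "video/webm". */
function baseType(mime) {
  return (mime || "").split(";")[0].trim().toLowerCase();
}

/**
 * Hypothetical check: true if a MIME type was requested but the recorder or
 * blob reports a different container.
 */
function containerMismatch(requested, actual) {
  if (!requested) return false; // nothing requested, so any container is acceptable
  return baseType(requested) !== baseType(actual);
}

// Browser usage:
// if (containerMismatch(options.mimeType, mediaRecorder.mimeType)) console.warn("recorder changed container");
// inside ondataavailable:
// if (containerMismatch(options.mimeType, event.data.type)) console.warn("chunk has unexpected type");
```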

I was testing in Safari 14.0.2, so I updated macOS, which upgraded me to Safari 14.0.3. In 14.0.3, the MediaRecorder rejected every MIME type I tried, and I got the alert from my code:

```javascript
alert('MediaRecorder is not supported by this browser.');
```

Now, I know that Safari 14 supports MediaRecorder. But my code was checking for MIME type support, and Safari would not accept VP9, VP8, or MP4 as MIME types for the video recording. Finally, I threw my hands in the air and removed the MIME type option from the MediaRecorder creation, and... it all worked. And it worked in all browsers.

So, the MediaRecorder in Safari 14.0.2 was (and may still be) doing weird things for the video upload:

- It would only record one track: either audio with a still image, or, if I removed the audio, a silent video with motion.
- The durations of the videos it created were wrong: short videos (under 15s) reported durations of many hours.
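A cheap safeguard against bogus durations is to compare the duration a file reports with how long the recorder actually ran. This is a hypothetical guard. `reportedSeconds` is whatever duration your pipeline reads back (for example from ffprobe), and the 50% tolerance plus one second of slack is an arbitrary assumption. If you read the duration client-side from a `<video>` element instead, WebM files recorded by Chrome often report `Infinity`, which this check also flags.

```javascript
/**
 * Hypothetical guard: is the reported duration roughly consistent with the
 * wall-clock recording time? `tolerance` is a relative error (0.5 = 50%).
 */
function isDurationPlausible(reportedSeconds, wallClockMs, tolerance = 0.5) {
  const actualSeconds = wallClockMs / 1000;
  if (!Number.isFinite(reportedSeconds) || actualSeconds <= 0) return false;
  // Relative tolerance plus one second of slack for very short clips
  return Math.abs(reportedSeconds - actualSeconds) <= actualSeconds * tolerance + 1;
}

// Browser usage (timestamps recorded by your own code):
// const startedAt = performance.now();             // right after mediaRecorder.start()
// const elapsedMs = performance.now() - startedAt; // in the onstop handler
// if (!isDurationPlausible(reportedSeconds, elapsedMs)) console.warn("suspicious duration");
```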

Of course, I've upgraded my Mac, so I cannot go back to test to see if removing the MIMEtype requirement will fix Safari 14.0.2.

In Safari 14.0.3, specifying a MIME type causes MediaRecorder creation to fail, so the video is never recorded.

Conclusion

Capturing the video from a canvas and recording it with the MediaRecorder API proved to be a bit of a challenge. But, I got it working, and as a result, I learned a lot about how all of the video streams and recording work in different browsers (especially Safari).

The end result is exactly what I wanted: a cross browser tool to record videos from your camera and screen, and make them easy to share.

In the next and final post, we'll explore the Web Speech API and how it can be used to create video captions in real time.

If you haven't done so yet, you can create your free api.video account following this link. As always, if you have any questions about this tutorial or would like to share your experience, please join our community forum.
