
The future of [ultra] low-latency video streaming

A technical overview of four popular ultra low latency protocols for live streaming video, presented by Anthony Dantard.

Anthony Dantard

November 9, 2021

Customers are "divinely discontent" and their expectations are constantly on the rise. Thanks to services like YouTube and Netflix, we all expect blazingly fast load times and smooth playback when streaming on-demand video. What might be less obvious is that, whether we're aware of it or not, that expectation is slowly transferring to real-time video communication and live streaming applications as well.

At api.video, we're obsessed with delivering the best developer and viewer experience for our customers, so it's no surprise that we spend a lot of time thinking about video protocols and keeping up to date with their development. Each video protocol has its strengths and weaknesses, and each is better suited to different applications.

In this post, we outline four of the major low-latency protocols, discuss the pros and cons of each, and share our view on where low-latency streaming is heading. We'll cover the following protocols in depth:

- WebRTC

- LL-HLS

- LL-DASH

- HESP

WebRTC

WebRTC supports both audio and video streams for real-time, bi-directional communication. It can be used for both ingestion and distribution, with an end-to-end latency of 300 ms to 600 ms depending on network quality and the distance between users. The project really took off in 2011, when Google, which had acquired Global IP Solutions (GIPS), the company that developed the original technology, open-sourced it and gave it significantly more human and financial resources.

Ten years later, WebRTC has become the standard for real-time video communication on the web. Its JavaScript API is standardized by the W3C and its underlying protocols by the IETF, allowing users to live stream directly to and from a web browser without installing any third-party tool.

In theory, WebRTC allows a video conference to be established between two browsers by connecting them directly, peer-to-peer. In practice, however, the architecture of computer networks (the internet, corporate networks, and home networks) can greatly complicate matters.

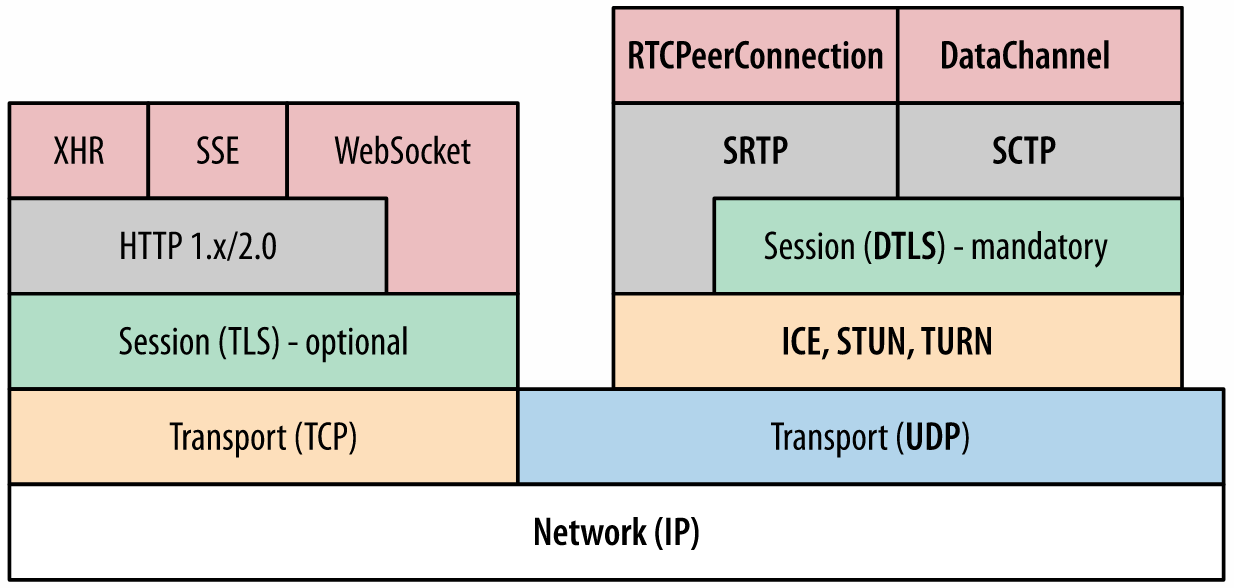

Diagram of the WebRTC stack

Finding your way through the internet labyrinth

In many cases, machines are not directly connected to the internet but sit behind several network layers, such as a NAT, a firewall, or a proxy. To get around these obstacles, WebRTC uses the ICE (Interactive Connectivity Establishment) protocol, which finds the most direct path through these network layers for two machines to communicate. For this, STUN and TURN servers are necessary: a STUN server lets a user discover their external (public) address, and a TURN server relays the traffic when a direct connection is not possible.
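ICE does not literally measure distances. It gathers candidate addresses (local "host" addresses, "server reflexive" addresses learned from a STUN server, and "relayed" addresses allocated on a TURN server), ranks them, and runs connectivity checks in priority order, so the most direct path that actually works tends to win. The sketch below computes candidate priorities with the formula and recommended type preferences from RFC 8445; the addresses are made-up documentation addresses, and a real ICE agent also pairs local with remote candidates and performs the checks, which this sketch leaves out.

```python
from dataclasses import dataclass
from typing import List

# Recommended type preferences from RFC 8445 (section 5.1.2.2):
# the more direct the path, the higher the preference.
TYPE_PREFERENCE = {"host": 126, "prflx": 110, "srflx": 100, "relay": 0}


@dataclass
class Candidate:
    kind: str                      # "host", "prflx", "srflx" (via STUN) or "relay" (via TURN)
    address: str                   # illustrative "ip:port" string, not a real endpoint
    local_preference: int = 65535  # value RFC 8445 suggests for a single-interface host
    component_id: int = 1          # 1 = RTP component

    @property
    def priority(self) -> int:
        # RFC 8445, section 5.1.2.1
        return ((2 ** 24) * TYPE_PREFERENCE[self.kind]
                + (2 ** 8) * self.local_preference
                + (256 - self.component_id))


def rank(candidates: List[Candidate]) -> List[Candidate]:
    """Order candidates so connectivity checks try the most direct paths first."""
    return sorted(candidates, key=lambda c: c.priority, reverse=True)


candidates = [
    Candidate("relay", "203.0.113.7:3478"),
    Candidate("host", "192.168.1.20:54321"),
    Candidate("srflx", "198.51.100.4:61000"),
]
for c in rank(candidates):
    print(c.kind, c.address, c.priority)
```

The relayed (TURN) candidate always ranks last: relaying works almost everywhere, but it adds a hop and server cost, so it is the fallback rather than the default.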

In addition to its own media server infrastructure, WebRTC therefore requires a STUN/TURN server infrastructure to operate communications in production at large scale.
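To make the STUN part concrete: a STUN server answers a Binding request by sending back the public address and port it saw the request come from, which is how a client behind a NAT learns its external address. The sketch below builds a Binding request and decodes the XOR-MAPPED-ADDRESS attribute of a response, following the message layout in RFC 8489 (which replaced RFC 5389). It handles IPv4 only and never touches the network: the response is fabricated locally to show the decoding, and a real client would also send the request over UDP and check that the response's transaction ID matches.

```python
import os
import socket
import struct
from typing import Optional, Tuple

MAGIC_COOKIE = 0x2112A442
BINDING_REQUEST = 0x0001
BINDING_SUCCESS = 0x0101
XOR_MAPPED_ADDRESS = 0x0020
HEADER_LEN = 20


def build_binding_request(transaction_id: Optional[bytes] = None) -> bytes:
    """20-byte STUN header with no attributes: type, length, magic cookie, transaction ID."""
    tid = transaction_id if transaction_id is not None else os.urandom(12)
    if len(tid) != 12:
        raise ValueError("transaction ID must be 96 bits")
    return struct.pack("!HHI", BINDING_REQUEST, 0, MAGIC_COOKIE) + tid


def parse_xor_mapped_address(response: bytes) -> Optional[Tuple[str, int]]:
    """Return the (ip, port) the server observed, or None if the attribute is absent.

    Sketch only: the transaction ID is not compared with the request's.
    """
    msg_type, length, cookie = struct.unpack("!HHI", response[:8])
    if msg_type != BINDING_SUCCESS or cookie != MAGIC_COOKIE:
        raise ValueError("not a STUN Binding success response")
    offset, end = HEADER_LEN, HEADER_LEN + length
    while offset + 4 <= end:
        attr_type, attr_len = struct.unpack("!HH", response[offset:offset + 4])
        value = response[offset + 4:offset + 4 + attr_len]
        if attr_type == XOR_MAPPED_ADDRESS and value[1] == 0x01:  # family 0x01 = IPv4
            port = struct.unpack("!H", value[2:4])[0] ^ (MAGIC_COOKIE >> 16)
            addr = struct.unpack("!I", value[4:8])[0] ^ MAGIC_COOKIE
            return socket.inet_ntoa(struct.pack("!I", addr)), port
        offset += 4 + attr_len + (-attr_len % 4)  # attributes are padded to 4 bytes
    return None


# Fabricated response for illustration: the "server" saw us as 198.51.100.4:61000.
tid = os.urandom(12)
observed_ip = struct.unpack("!I", socket.inet_aton("198.51.100.4"))[0]
attr = struct.pack("!HHBBHI", XOR_MAPPED_ADDRESS, 8, 0, 0x01,
                   61000 ^ (MAGIC_COOKIE >> 16), observed_ip ^ MAGIC_COOKIE)
response = struct.pack("!HHI", BINDING_SUCCESS, len(attr), MAGIC_COOKIE) + tid + attr
print(len(build_binding_request(tid)), parse_xor_mapped_address(response))
```

The request is tiny and stateless for the server, which is why STUN is cheap to operate; TURN is the expensive part, since it relays every media packet when no direct path exists.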

To further confuse the issue

WebRTC requires all data exchanged between peers (video, audio, and application data) to be encrypted. Because it typically runs over UDP, which offers no security or delivery guarantees of its own, WebRTC embeds several protocols on top of it to fill those gaps.

WebRTC uses SDP (Session Description Protocol) to describe the properties of a peer-to-peer connection, such as the types of media to be exchanged and their associated codecs, the network transports, and some bandwidth information.
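To show what that description looks like, the sketch below parses a hand-written SDP fragment and lists each media section with its transport profile, codecs (from `a=rtpmap` lines) and bandwidth (from `b=AS`, in kbps). The fragment is illustrative and heavily trimmed: a real WebRTC offer also carries ICE credentials, a DTLS fingerprint and many other attributes, and this is not a complete SDP parser.

```python
from typing import Dict, List

# Illustrative, trimmed offer; real browser offers contain many more lines.
OFFER_FRAGMENT = """v=0
o=- 4611731400430051336 2 IN IP4 127.0.0.1
s=-
t=0 0
m=audio 9 UDP/TLS/RTP/SAVPF 111
a=rtpmap:111 opus/48000/2
m=video 9 UDP/TLS/RTP/SAVPF 96 98
b=AS:2000
a=rtpmap:96 VP8/90000
a=rtpmap:98 VP9/90000
"""


def summarize_sdp(sdp: str) -> List[Dict]:
    """List each media section with its transport, codecs and bandwidth (if any)."""
    media: List[Dict] = []
    for raw in sdp.splitlines():
        line = raw.strip()
        if line.startswith("m="):
            # m=<media> <port> <proto> <fmt> ...
            kind, _port, proto, *payload_types = line[2:].split()
            media.append({"kind": kind, "transport": proto,
                          "payload_types": payload_types, "codecs": {},
                          "bandwidth_kbps": None})
        elif not media:
            continue  # session-level lines (v=, o=, s=, t=) are ignored here
        elif line.startswith("a=rtpmap:"):
            # a=rtpmap:<payload type> <encoding name>/<clock rate>[/<channels>]
            pt, encoding = line[len("a=rtpmap:"):].split(" ", 1)
            media[-1]["codecs"][pt] = encoding.split("/")[0]
        elif line.startswith("b=AS:"):
            media[-1]["bandwidth_kbps"] = int(line[len("b=AS:"):])
    return media


for section in summarize_sdp(OFFER_FRAGMENT):
    print(section["kind"], section["transport"], section["codecs"], section["bandwidth_kbps"])
```

In WebRTC, one peer sends such an offer and the other replies with an answer through a signaling channel of the application's choosing; the intersection of their codec lists decides what is actually sent.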

DTLS (Datagram Transport Layer Security), using self-signed certificates, negotiates the secrets used to encrypt media data and secures the transport of application data between peers. SRTP (Secure Real-time Transport Protocol) carries the encrypted video and audio streams. Finally, SCTP (Stream Control Transmission Protocol), tunneled over DTLS, is used to exchange application data (WebRTC data channels).
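Because STUN, DTLS and SRTP usually share a single UDP port in WebRTC, an endpoint has to tell incoming packets apart. RFC 7983 does this by looking at the first byte of each packet. The sketch below implements only the ranges relevant here (the RFC also reserves ranges for ZRTP and TURN channel data, which are left out), and the sample packets are truncated byte strings built just to exercise the rule. SCTP never appears directly on the wire: it travels inside DTLS records.

```python
def classify_packet(packet: bytes) -> str:
    """Demultiplex a packet on a shared WebRTC UDP port by its first byte (RFC 7983 subset)."""
    if not packet:
        raise ValueError("empty packet")
    first = packet[0]
    if first <= 3:
        return "STUN"          # ICE connectivity checks
    if 20 <= first <= 63:
        return "DTLS"          # handshake, plus SCTP data channels tunneled inside DTLS
    if 128 <= first <= 191:
        return "SRTP/SRTCP"    # RTP version 2 sets the top two bits to 10
    return "unknown"


# Truncated sample packets, for illustration only.
samples = {
    "STUN Binding request": bytes.fromhex("000100002112a442"),
    "DTLS handshake record": bytes([22, 0xFE, 0xFD]),  # content type 22, DTLS 1.2
    "SRTP packet": bytes([0x80, 0x60]),               # V=2, payload type 96
}
for name, packet in samples.items():
    print(f"{name}: {classify_packet(packet)}")
```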

The challenges of WebRTC scalability

Several network architectures and topologies can be used to run WebRTC communications, each suited to a specific use case and each with its own advantages and drawbacks. We won't dig into topology details in this article, but be aware that the SFU (Selective Forwarding Unit) is perhaps the most popular architecture in modern WebRTC applications.

The main challenge with WebRTC is scaling it cost-effectively on a planetary scale. We are not saying that WebRTC can't be scaled worldwide: that was true a few years ago, in its early days, but it no longer is, thanks to the improvements several companies have made to WebRTC topologies.

Because WebRTC is a mashup of several protocols, each of which engineers have to learn and master, it is very complex, and most companies find it difficult to implement in their infrastructure at a global scale in a cost-effective way. That's why only a few companies have succeeded in making it fully scalable today. They do so by mixing SFU and MCU (Multipoint Control Unit) topologies and deploying them at the edge.
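A rough way to see why topology matters for scale is to count the streams each participant sends and receives in an n-party call. The sketch below uses idealized counts (one stream per participant, no simulcast) and includes full mesh, where every browser connects directly to every other one, as a baseline. It's a back-of-the-envelope model, not a capacity planner.

```python
from typing import Tuple


def streams_per_participant(n: int, topology: str) -> Tuple[int, int]:
    """Return idealized (upstream, downstream) media streams for one participant in an n-party call."""
    if n < 2:
        raise ValueError("a call needs at least two participants")
    if topology == "mesh":  # each peer sends to and receives from every other peer
        return n - 1, n - 1
    if topology == "sfu":   # send once; the SFU forwards every other participant's stream
        return 1, n - 1
    if topology == "mcu":   # send once; the MCU decodes, mixes and re-encodes one stream
        return 1, 1
    raise ValueError(f"unknown topology: {topology}")


for topology in ("mesh", "sfu", "mcu"):
    up, down = streams_per_participant(10, topology)
    print(f"{topology}: {up} up / {down} down per participant")
```

An SFU keeps each participant's upload cheap and forwards packets without transcoding, while an MCU minimizes client bandwidth at the cost of heavy server-side decoding and encoding; combining the two trades these costs against each other.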

Low Latency HTTP ABR streaming protocols

In contrast to WebRTC, a peer-to-peer protocol that in theory doesn't require a central server to establish communication between two machines, HTTP-based streaming protocols require a server and communicate over standard HTTP. They are built on the very foundations of the web.

HLS/LL-HLS

The HTTP Live Streaming (HLS) protocol, introduced by Apple in 2009, can be used to deliver live and on-demand streams to global-scale audiences and was designed with scalability rather than latency in mind. The average latency viewers experience is around 15s, and HLS latency can reach 30s to 1 minute. Even with performant infrastructure and optimized packaging and player configuration, one can expect latencies of around 6s, which is simply too high for real-time live video use cases. Nevertheless, HLS is still the most popular ABR streaming protocol. Its success is mainly due to its broad compatibility with devices and browsers and to its support for advanced features like closed captions, ads, and content protection with AES encryption or DRM.
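Much of HLS's latency comes from buffering whole segments. The HLS specification (RFC 8216) says a client should not start playback from a segment that begins less than three target durations from the end of a live playlist, so a rough lower bound on latency is three target durations plus whatever the encoding, packaging and CDN pipeline adds. The sketch below applies that rule of thumb; the overhead value is an assumption you would have to measure for your own stack.

```python
def estimate_hls_latency(target_duration_s: float, pipeline_overhead_s: float = 0.0,
                         segments_from_edge: int = 3) -> float:
    """Rough live latency: playback starts `segments_from_edge` target durations
    behind the end of the playlist, plus an assumed pipeline overhead."""
    if target_duration_s <= 0:
        raise ValueError("target duration must be positive")
    return segments_from_edge * target_duration_s + pipeline_overhead_s


for td in (10, 6, 2):
    print(f"{td}s segments -> ~{estimate_hls_latency(td):.0f}s + pipeline overhead")
```

With 2s segments, the player buffer alone accounts for roughly 6s, which is consistent with the figure given for well-tuned HLS setups.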

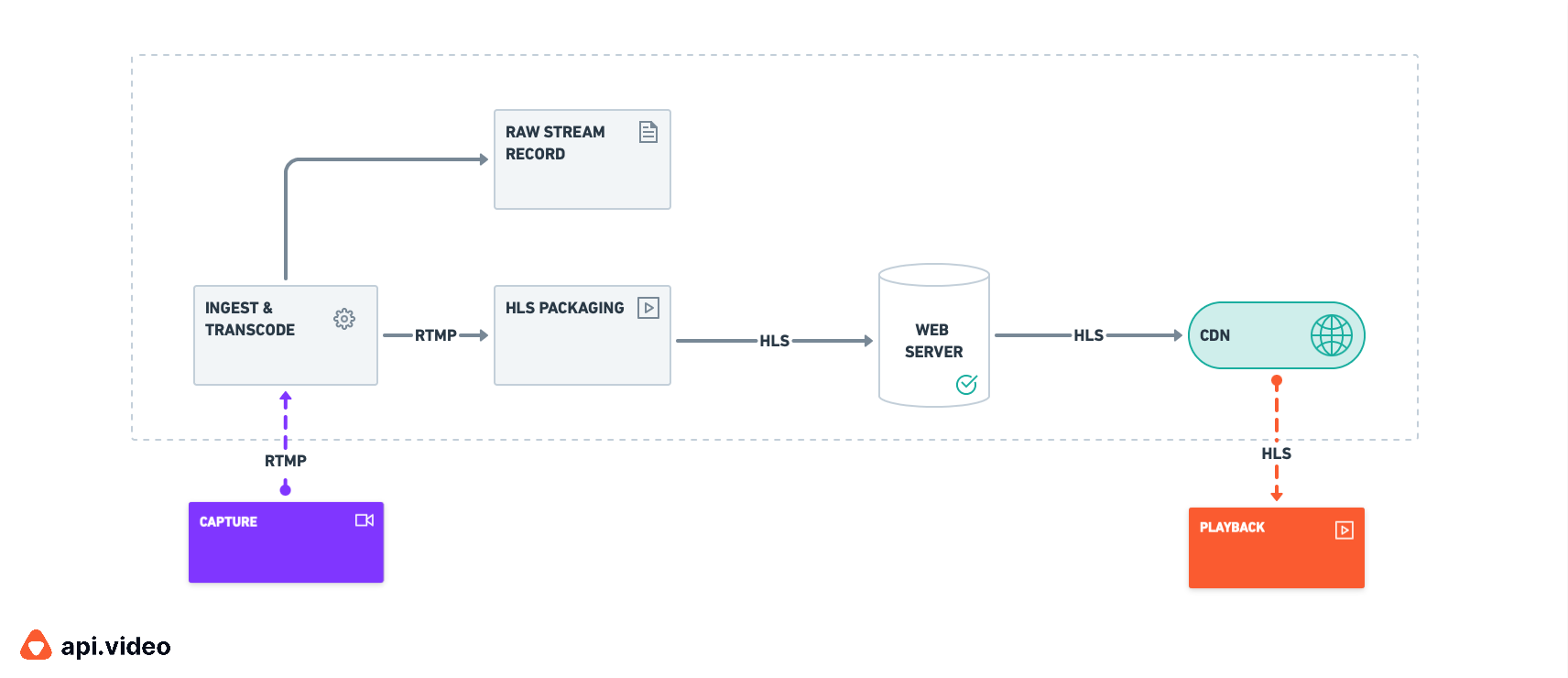

Although HLS can also be used to ingest video, it's normally used only for distribution and paired with RTMP for ingestion. Below is a typical live streaming architecture involving both RTMP and HLS.

RTMP/HLS live streaming workflow

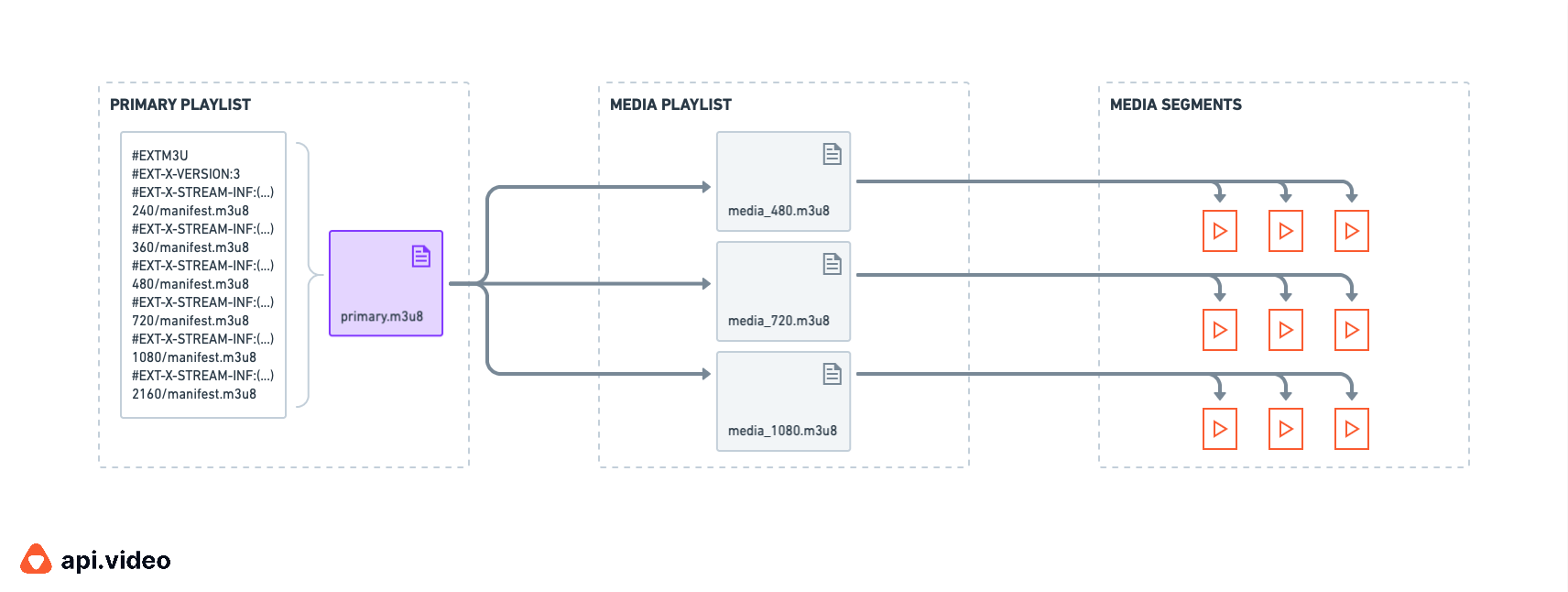

Without digging into the details of how HLS works (see our other blog articles to learn more), below is a simple schema showing how playlists and media segmentation give HLS the ability to do adaptive bitrate (ABR) streaming.

Diagram of an HLS playlist and segmentation
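As a companion to the diagram, the sketch below generates a minimal multivariant ("master") playlist that points to one media playlist per quality level, a minimal live media playlist, and a naive ABR rule that picks the highest bitrate fitting within a safety margin of the measured throughput. The tags are standard HLS tags; the URIs, bitrates and the 0.8 safety factor are illustrative assumptions, and real players use far more sophisticated ABR logic.

```python
import math
from dataclasses import dataclass
from typing import List


@dataclass
class Variant:
    bandwidth: int    # peak bits per second
    resolution: str   # e.g. "1280x720"
    uri: str          # illustrative path to this variant's media playlist


def multivariant_playlist(variants: List[Variant]) -> str:
    lines = ["#EXTM3U"]
    for v in variants:
        lines.append(f"#EXT-X-STREAM-INF:BANDWIDTH={v.bandwidth},RESOLUTION={v.resolution}")
        lines.append(v.uri)
    return "\n".join(lines) + "\n"


def live_media_playlist(segment_uris: List[str], segment_duration: float,
                        first_sequence: int) -> str:
    lines = ["#EXTM3U", "#EXT-X-VERSION:3",
             f"#EXT-X-TARGETDURATION:{math.ceil(segment_duration)}",
             f"#EXT-X-MEDIA-SEQUENCE:{first_sequence}"]
    for uri in segment_uris:
        lines += [f"#EXTINF:{segment_duration:.3f},", uri]
    return "\n".join(lines) + "\n"  # no #EXT-X-ENDLIST: the stream is still live


def pick_variant(variants: List[Variant], measured_bps: float, safety: float = 0.8) -> Variant:
    """Naive ABR: highest bitrate under safety * throughput, else the lowest one."""
    fitting = [v for v in variants if v.bandwidth <= measured_bps * safety]
    if fitting:
        return max(fitting, key=lambda v: v.bandwidth)
    return min(variants, key=lambda v: v.bandwidth)


# Illustrative bitrate ladder.
ladder = [
    Variant(800_000, "640x360", "360p/index.m3u8"),
    Variant(2_800_000, "1280x720", "720p/index.m3u8"),
    Variant(5_000_000, "1920x1080", "1080p/index.m3u8"),
]
print(multivariant_playlist(ladder))
print(live_media_playlist(["seg120.ts", "seg121.ts", "seg122.ts"], 6.0, 120))
print(pick_variant(ladder, measured_bps=4_000_000).resolution)
```

Because every variant is cut into segments on the same timeline, the player can switch quality at any segment boundary simply by fetching the next segment from a different media playlist.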

So how did HLS evolve to support lower latencies? In the beginning, HLS only worked with the MPEG-TS container format and the H.264/AVC video codec. In 2016, support for fragmented MP4 (fMP4) was added, which led to support for the CMAF format and compatibility with DASH distribution. One year later, HLS added support for H.265/HEVC (fMP4 only), allowing a significant reduction in bandwidth usage.

Low-Latency HLS (LL-HLS), an extension of the base HLS protocol, was finalized in April 2020. It enables low-latency live streaming by using partial segments that are transferred as soon as they are produced, while maintaining HLS's inherent scalability. The first draft of this extension required support for "HTTP/2 Push", which made it difficult to implement. Unsurprisingly, major CDN vendors chose not to support this feature, which undermined LL-HLS's ability to scale, since CDNs could not cache the pushed content. Fortunately, Apple listened to community and industry concerns and released a new revision of the extension that removed the need for "HTTP/2 Push" but still relies on HTTP/2. Apple then merged the extension into the HLS specification, making it fully backward compatible; this also means that every feature covered by HLS is available in LL-HLS.

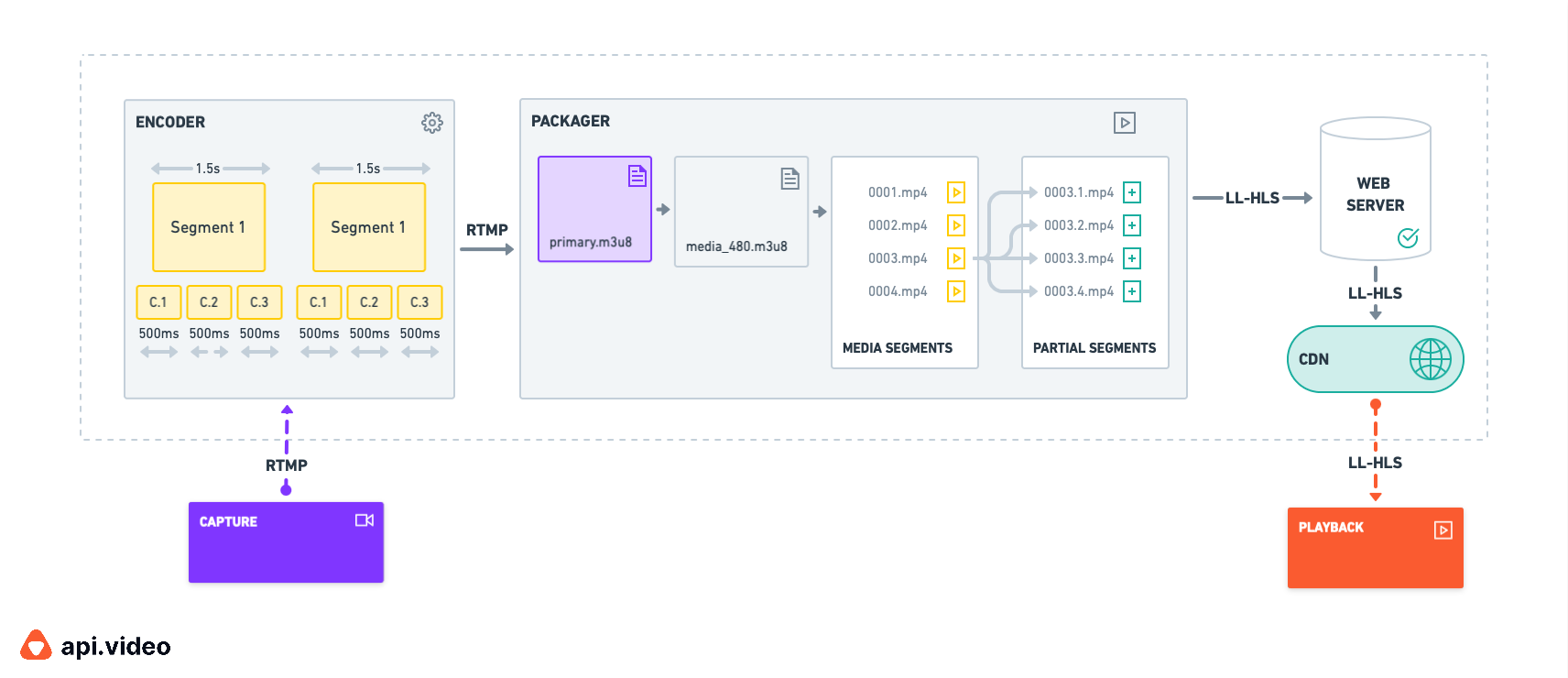

LL-HLS works practically the same way as HLS, but makes some important changes to reduce the latency in the packaging process. Below are the most important updates to the specification which make low-latency possible while preserving scalability and ability to do ABR streaming:

- Partial Segments: Each segment is divided into Partial Segments, or "Parts", each a fraction of a second long, which are referenced in the Media Playlist. Parts have a very short cache lifetime, in contrast to the parent segment, which can stay on the CDN much longer. Once a complete segment has been generated, its associated Partial Segments can be removed from the Media Playlist to reduce the bandwidth footprint.

- Preload Hints: The Media Playlist can include an "EXT-X-PRELOAD-HINT" tag, which tells the player which Partial Segment is coming next, so the player can request it in advance; the server responds to this request as soon as the data is available.

- Blocking Playlist Reload: This feature avoids repeated polling of the Media Playlist. The player adds query parameters ("_HLS_msn" and "_HLS_part") to its playlist request, telling the server to respond only once the playlist includes the requested segment or Partial Segment.

- Playlist Delta Updates: Thanks to the new "EXT-X-SKIP" tag, a player can request only the updated part of the Media Playlist and avoid re-downloading data it already has.

- Rendition Reports: The Media Playlist carries an "EXT-X-RENDITION-REPORT" tag for each rendition listed in the Primary Manifest (aka Master Manifest), giving the player useful information for ABR switching, such as the last sequence number and the last Partial Segment available in each rendition.
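Blocking Playlist Reload and Playlist Delta Updates come down to query parameters on the playlist URL: `_HLS_msn` (the Media Sequence Number), `_HLS_part` (the Part index within that segment) and `_HLS_skip`. The sketch below builds such a request URL and works out which Part to wait for next. The playlist URL is made up, and `parts_per_segment` is a simplifying assumption: a real player reads the current sequence number and Parts from the playlist it last received.

```python
from typing import Optional, Tuple
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def blocking_reload_url(playlist_url: str, msn: int, part: Optional[int] = None,
                        skip: bool = False) -> str:
    """Ask the server to hold the response until segment `msn` (and Part `part`) exists."""
    scheme, netloc, path, query, fragment = urlsplit(playlist_url)
    params = parse_qsl(query)  # keep any query parameters already present
    params.append(("_HLS_msn", str(msn)))
    if part is not None:
        params.append(("_HLS_part", str(part)))  # only meaningful together with _HLS_msn
    if skip:
        params.append(("_HLS_skip", "YES"))  # request a delta update (EXT-X-SKIP)
    return urlunsplit((scheme, netloc, path, urlencode(params), fragment))


def next_part(last_msn: int, last_part: int, parts_per_segment: int) -> Tuple[int, int]:
    """(msn, part) of the Partial Segment following the last one received.

    parts_per_segment is a simplifying assumption for this sketch.
    """
    if last_part + 1 < parts_per_segment:
        return last_msn, last_part + 1
    return last_msn + 1, 0  # first Part of the next segment


# Illustrative URL and numbers.
msn, part = next_part(last_msn=273, last_part=5, parts_per_segment=6)
print(blocking_reload_url("https://cdn.example.com/live/720p.m3u8", msn, part, skip=True))
```

Because the server holds each request until the new Part exists, the player learns about new media as soon as it is packaged instead of on its next polling interval.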

LL-HLS workflow media segmentation

With current public cloud providers, you can expect an end-to-end latency of around 2s with an appropriate Group of Pictures (GOP) size, Partial Segments, and a reasonable buffer size. While this is still well above what WebRTC can achieve, it involves significantly less complexity and is easier to integrate into an existing live streaming architecture. You can also push the protocol to its limits by tuning some settings and reach a latency of around 1s, but this comes at the expense of stability and playback quality.
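The buffer-size trade-off can be put into numbers. In LL-HLS, the server advertises a PART-HOLD-BACK value in the EXT-X-SERVER-CONTROL tag: the minimum distance from the live edge at which players should play. The specification requires it to be at least twice the Part target duration and recommends at least three times. The sketch below estimates latency from that hold-back plus a pipeline overhead (encoding, packaging, CDN); the overhead figures are assumptions, not measurements.

```python
def ll_hls_latency(part_target_s: float, hold_back_multiple: float = 3.0,
                   pipeline_overhead_s: float = 0.0) -> float:
    """Rough LL-HLS latency: PART-HOLD-BACK (as a multiple of the Part target)
    plus an assumed encoding/packaging/CDN overhead."""
    if hold_back_multiple < 2:
        raise ValueError("PART-HOLD-BACK must be at least twice the Part target duration")
    return hold_back_multiple * part_target_s + pipeline_overhead_s


# Illustrative numbers: ~333 ms Parts and assumed pipeline overheads.
print(round(ll_hls_latency(1 / 3, pipeline_overhead_s=1.0), 2))       # recommended 3x hold-back
print(round(ll_hls_latency(1 / 3, 2.0, pipeline_overhead_s=0.5), 2))  # aggressive tuning
```

Shrinking the hold-back leaves the player less buffer to absorb network jitter, which is why pushing LL-HLS toward 1s costs stability.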

DASH/LL-DASH

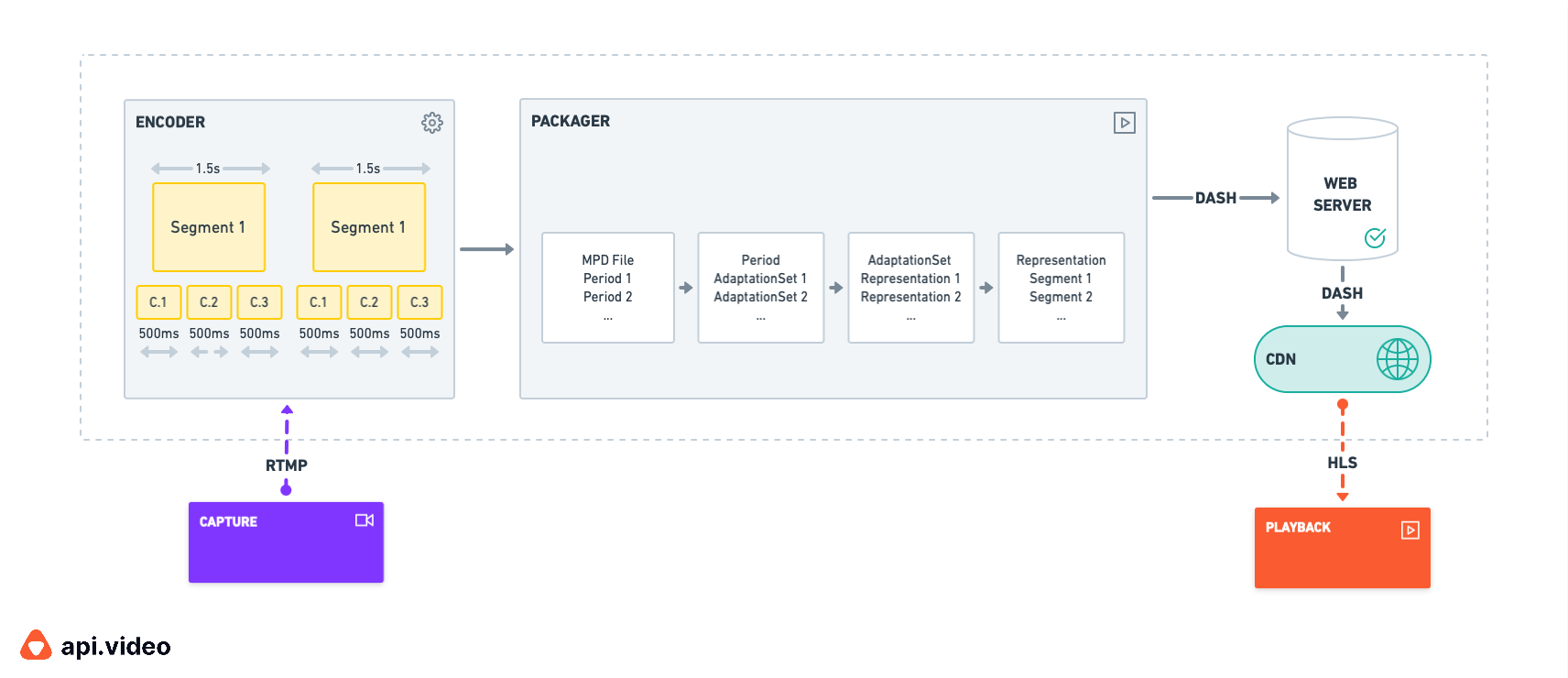

DASH (Dynamic Adaptive Streaming over HTTP) was published in April 2012 by the Moving Picture Experts Group (MPEG) to create an industry-standard alternative to Apple's HLS protocol. It works similarly to HLS: content is prepared at different quality levels, and each quality level is divided into chunks indexed in manifest files to enable ABR streaming.

It also supports content protection through the Common Encryption (CENC) standard, which makes it compatible with all major DRM systems.
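DASH manifests (MPDs) commonly index media chunks with a `SegmentTemplate` whose `media` attribute contains identifiers such as `$RepresentationID$` and `$Number$`, optionally zero-padded with a printf-style width like `$Number%05d$`. The sketch below expands such a template and, for a simple live stream (a single Period starting at `availabilityStartTime`, a constant segment duration, no `availabilityTimeOffset`), computes the newest fully available segment number. The template string and values are illustrative, and production players handle many more cases.

```python
import math
import re
from typing import Optional

# $Identifier$ or $Identifier%0<width>d$, plus the $$ escape for a literal dollar sign.
_TEMPLATE_ID = re.compile(r"\$\$|\$(RepresentationID|Number|Bandwidth|Time)(?:%0(\d+)d)?\$")


def expand_template(template: str, representation_id: str, number: int,
                    bandwidth: int, time: int = 0) -> str:
    values = {"RepresentationID": representation_id, "Number": number,
              "Bandwidth": bandwidth, "Time": time}

    def substitute(match: re.Match) -> str:
        if match.group(0) == "$$":
            return "$"
        value = values[match.group(1)]
        width = match.group(2)
        return str(value).zfill(int(width)) if width else str(value)

    return _TEMPLATE_ID.sub(substitute, template)


def latest_available_number(elapsed_s: float, segment_duration_s: float,
                            start_number: int = 1) -> Optional[int]:
    """Newest complete segment `elapsed_s` seconds after availabilityStartTime.

    Simplified: LL-DASH uses availabilityTimeOffset to announce segments
    before they are complete, which this sketch ignores.
    """
    completed = math.floor(elapsed_s / segment_duration_s)
    return start_number + completed - 1 if completed >= 1 else None


# Illustrative template and timing.
template = "video/$RepresentationID$/seg-$Number%05d$.m4s"
number = latest_available_number(elapsed_s=21.0, segment_duration_s=2.0)
print(expand_template(template, "720p", number, bandwidth=3_000_000))
```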

The main difference from LL-HLS is that LL-DASH is completely codec-agnostic. Unfortunately, LL-DASH is not natively supported on Apple's iOS devices or on Apple TV.

In 2017, LL-DASH introduced the changes to the standard needed to bring latency down to around 2s. Behind the scenes, it relies on the Common Media Application Format (CMAF), which, combined with some HTTP features, can reduce latency significantly. The two features that enable low latency for DASH with CMAF are chunked encoding and chunked transfer encoding. Chunked encoding, a CMAF feature, breaks each segment into smaller chunks that can be sent to the distribution layer as soon as they are encoded. Chunked transfer encoding, an HTTP/1.1 feature that HTTP/2 does not allow (HTTP/2 streams data in its own frames instead), then takes over to deliver those chunks on the fly. To operate this kind of delivery, however, the whole distribution stack, from the origin through to the CDN, has to support chunked transfer encoding.
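Chunked transfer encoding itself is simple framing: each chunk is sent as its size in hexadecimal, CRLF, the data, CRLF, and a zero-size chunk ends the body (RFC 9112, section 7.1). The sketch below frames a series of byte strings that stand in for CMAF chunks (in reality, `moof`/`mdat` box pairs emitted by the packager) and parses them back. It skips chunk extensions and ignores trailers; HTTP/2 does not use this framing at all.

```python
from typing import Iterable, Iterator, List


def encode_chunked(chunks: Iterable[bytes]) -> Iterator[bytes]:
    """Yield wire-format pieces as each chunk becomes available, without waiting for the full segment."""
    for chunk in chunks:
        if chunk:  # a zero-length chunk would signal the end of the body
            yield f"{len(chunk):X}\r\n".encode("ascii") + chunk + b"\r\n"
    yield b"0\r\n\r\n"  # last-chunk followed by an empty trailer section


def decode_chunked(body: bytes) -> List[bytes]:
    chunks: List[bytes] = []
    pos = 0
    while True:
        line_end = body.index(b"\r\n", pos)
        size = int(body[pos:line_end].split(b";")[0], 16)  # drop any chunk extensions
        pos = line_end + 2
        if size == 0:
            return chunks
        chunks.append(body[pos:pos + size])
        pos += size + 2  # skip the data and its trailing CRLF


cmaf_chunks = [b"moof+mdat #1", b"moof+mdat #2", b"moof+mdat #3"]  # placeholder payloads
wire = b"".join(encode_chunked(cmaf_chunks))
print(wire)
print(decode_chunked(wire) == cmaf_chunks)
```

Since `encode_chunked` is a generator, each piece can be written to the socket the moment the packager produces a chunk, which is exactly what lets the CDN forward a segment before it is complete.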

Diagram of LL-HLS workflow and media segmentation

HESP

The High Efficiency Streaming Protocol (HESP) is another HTTP-based ABR streaming protocol, and the most recent of the four. It was created by THEOplayer and is promoted through the HESP Alliance, whose main mission is to standardize and promote the protocol.

HESP was developed to solve several of the main constraints of other HTTP streaming protocols. Its goals are:

- To reach ultra-low latency (under 500 ms) while preserving scalability (i.e., it still works with major CDN vendors)

- To reduce the bandwidth required during the streaming

- To reduce zapping times (zapping time is how long it takes to switch between streams; think of changing channels on cable television)

These three performance metrics directly affect the experience of a live stream's audience. Given its extremely low latency, HESP can also be a viable alternative to WebRTC. Its main drawback is that it's a proprietary, commercial protocol and, for commercial use, quite expensive. Let's dig into a technical overview of how it works.

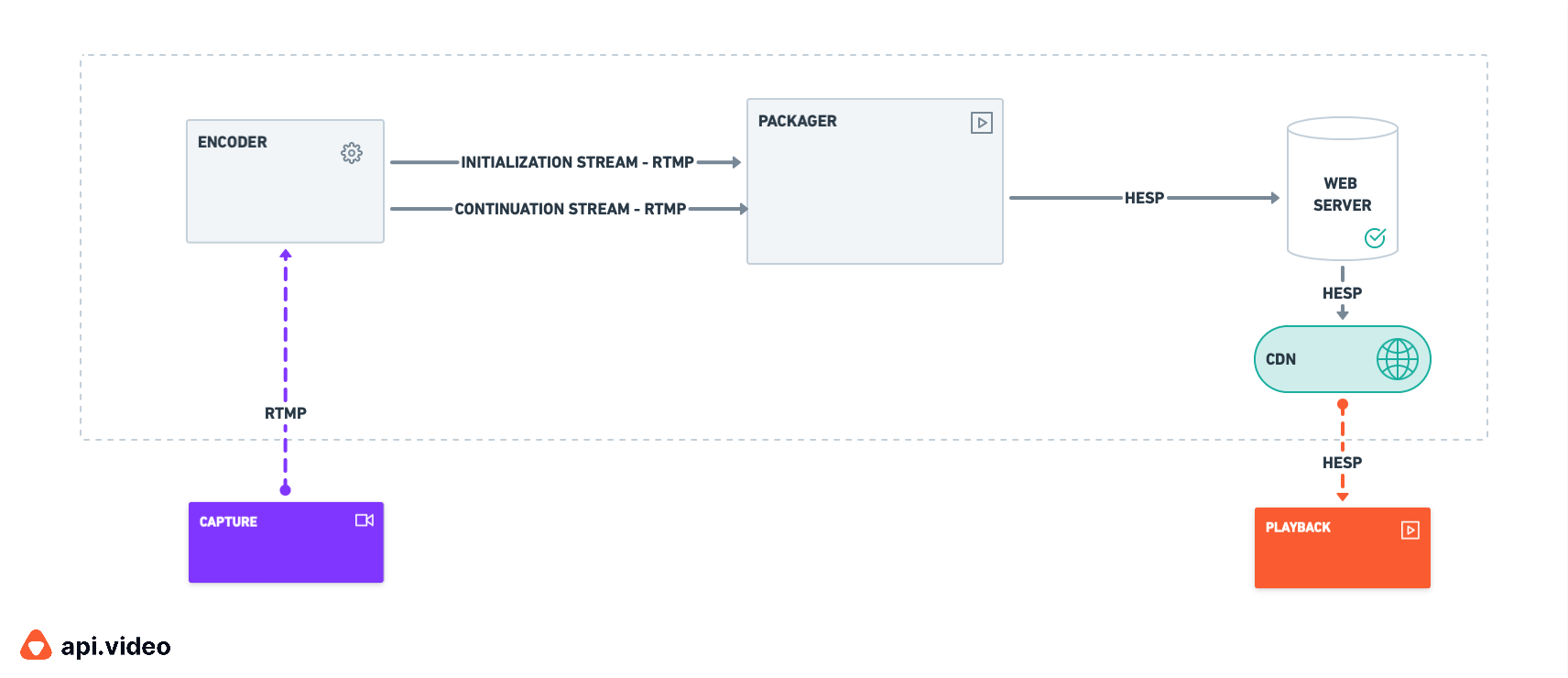

The main difference between HESP and other low-latency protocols is that it relies on two streams rather than one. Before we go into how these streams achieve sub-second latency, let's talk a bit about the different types of frames used when streaming video.

In video compression, there are several types of frames:

- IDR-Frame (aka: Key Frame)

- I-Frame

- P-Frame

- B-Frame

Let's start with the I-Frame, as it makes the others easier to understand. An I-Frame contains the whole image and is coded without reference to any other frame.

The key frame (or IDR-Frame) is a special I-Frame: no frame after an IDR frame can reference any frame before it. In other words, all IDR-Frames are I-Frames, but not the other way around. A player needs a key frame to start playback.

The P-Frame holds only the changes in the image relative to a previous frame.

The B-Frame holds the changes between the current frame and both the preceding and following frames.
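Why key frames matter for startup: a decoder that joins a live stream mid-GOP has to discard frames until the next IDR frame, because every other frame depends on frames it never received. The sketch below models a stream as a list of frame types and computes how long a joining player waits. The 2-second GOP at 30 fps with a B-B-P pattern is an illustrative assumption, and the model ignores open-GOP streams, where decoding can sometimes start at a non-IDR I-frame.

```python
from typing import List, Optional


def next_idr(frames: List[str], join_index: int) -> Optional[int]:
    """Index of the first IDR frame at or after the join point, or None if there is none."""
    for i in range(join_index, len(frames)):
        if frames[i] == "IDR":
            return i
    return None


def startup_wait_seconds(frames: List[str], join_index: int, fps: float) -> float:
    start = next_idr(frames, join_index)
    if start is None:
        raise ValueError("no IDR frame after the join point")
    return (start - join_index) / fps


# Illustrative 2-second GOP at 30 fps: an IDR frame, then B/P frames, ending on a P-frame.
gop = ["IDR"] + ["B", "B", "P"] * 19 + ["B", "P"]
stream = gop * 3

print(len(gop))                               # 60 frames per GOP
print(startup_wait_seconds(stream, 0, 30))    # joined exactly on a key frame
print(startup_wait_seconds(stream, 1, 30))    # joined just after it: nearly a full GOP
```

Shorter GOPs reduce this wait but cost bandwidth, since key frames are the largest frames; this is the tension HESP's two-stream design addresses.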

Now that you know the role of the different kinds of frames composing a video, we can go back to the streams that compose the HESP protocol:

- The first stream is called the "Initialization Stream" and contains only key frames.

- The second one is called the "Continuation Stream" and is like a normal encoded stream, meaning that it can contain all types of frames depending on the encoding parameters and defined profiles for maximum performance or maximum compatibility.

The Initialization Stream is used only to start playback or when you seek to a different position on the video timeline. Because this stream contains only key frames, the player's decoder can decode a frame immediately and start (or restart) playback of the live event. Once the first frame has been pulled from the Initialization Stream and decoded, the player automatically switches to the Continuation Stream to continue playback. It switches because key frames are complete images and therefore expensive in terms of bandwidth. By switching to the second stream, the player falls back to a regular live streaming bandwidth footprint and benefits from the CDN's ability to scale the audience and reduce the load on the origin.
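The switch can be sketched as a fetch plan. Assuming, as described above, that the Continuation Stream can be decoded from the point where the player has an Initialization Stream key frame, a player joining at frame j fetches one key frame from the Initialization Stream and everything after it from the Continuation Stream. This is a conceptual model, not HESP's actual packaging or API: the stream labels are hypothetical, and the Continuation Stream here uses only P-frames to sidestep B-frame reordering.

```python
from typing import List, Tuple

Fetch = Tuple[str, int, str]  # (stream label, frame index, frame type); labels are hypothetical


def hesp_join_plan(continuation: List[str], join_index: int, frames_to_play: int) -> List[Fetch]:
    """Frames a conceptual HESP player fetches when joining (or seeking) at join_index."""
    if not 0 <= join_index < len(continuation):
        raise IndexError("join point outside the stream")
    # 1. A single key frame from the Initialization Stream at the exact join position:
    #    playback can start immediately, without waiting for the next IDR.
    plan: List[Fetch] = [("initialization", join_index, "IDR")]
    # 2. Everything after it comes from the cheaper, CDN-cached Continuation Stream.
    end = min(join_index + frames_to_play, len(continuation))
    plan += [("continuation", i, continuation[i]) for i in range(join_index + 1, end)]
    return plan


# Illustrative Continuation Stream: 2-second GOPs at 30 fps, P-frames only.
continuation = (["IDR"] + ["P"] * 59) * 2
for fetch in hesp_join_plan(continuation, join_index=10, frames_to_play=4):
    print(fetch)
```

A conventional player joining at frame 10 would instead wait for the next IDR frame at index 60, about 1.7s at 30 fps; the price HESP pays is encoding and storing the extra key-frame-only stream.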

Like DASH/LL-DASH, HESP uses CMAF-CTE (CMAF with chunked transfer encoding) for packaging and distribution, and inherits all of its features, such as encryption, DRM support, subtitles and captions, advertisements, and more.

HESP Workflow

This protocol sounds revolutionary on paper, right? It does address many issues. But as with other protocols, and everything else in life, nothing is perfect, and HESP brings its own set of drawbacks and limitations.

The first drawback is that, because the protocol works with two streams encoded in sync, encoding and storage costs are higher than for other HTTP-based streaming protocols.

The second drawback is that, even though HESP relies on CMAF-CTE packaging and distribution, the packager has to be updated to handle two streams instead of one in the encoding and distribution steps.

Similarly, the player must be updated to play HESP streams and handle all of their subtleties.

Last but not least, you have to pay royalties for any commercial use, which inherently limits adoption.

So which protocol is the best?

The quick answer is none. What a helpful answer for making a choice, right?

The more complete answer is that it will depend heavily on the use case that you want to cover and the time and resources (both financial and human) you have at your disposal.

If you need to offer your customers and audience live streaming with a reasonable amount of latency (6s-15s) while remaining cost-effective, we recommend HLS and/or DASH, as they can easily scale to millions of viewers. You can find many open-source libraries and streaming platforms that implement them very well. Our preferred choice is HLS, as it has the largest footprint in terms of adoption and support across browsers and devices: it can be played virtually anywhere. (If you want to test it, you can try our live streaming service for free just by creating an account here.)

If latency is critical for your business, you should look at low-latency or even ultra-low-latency protocols. If you only need one-way live streaming with a latency of around 2s (e.g., sports events, concerts, or e-learning where students listen to a teacher and ask questions in a chat) and you don't have a big budget, you should look at LL-HLS and/or LL-DASH.

For the remaining use cases where more than 1s of latency is not acceptable, you don't have many options left. It's either going to be WebRTC or HESP.

If you're a non-profit organization that needs to serve a large audience, or if you simply don't want to build highly complex infrastructure and don't have a large budget, take a look at HESP.

If you need bi-directional video communication, WebRTC has proven that it can do this well, but if building in-house infrastructure isn't part of your core business, you'll probably want to rely on a provider that has already built such infrastructure in a scalable fashion. Be aware that this is likely to be expensive. Since the HESP Alliance's royalty fees are client-based and offered in different packages, HESP can also be a good option on a limited budget if your audience is of a reasonable size.

Finally, if money is not a concern, our choice would be HESP, because it involves much less work than a WebRTC implementation and is compatible with a wide range of devices and browsers.
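The recommendations above can be condensed into a small helper. It encodes only this article's rules of thumb (latency tolerance and whether communication is two-way) and deliberately ignores budget, audience size and licensing, which, as discussed above, can tip the balance. The thresholds come from the latency ranges quoted in this section; treat the function as a summary, not a decision engine.

```python
from typing import List


def suggest_protocols(max_latency_s: float, bidirectional: bool = False) -> List[str]:
    """Candidate protocols per this article's rules of thumb (budget and licensing not modeled)."""
    if bidirectional:
        return ["WebRTC"]             # real-time, two-way communication
    if max_latency_s >= 6:
        return ["HLS", "DASH"]        # 6s-15s: cheapest to scale to millions of viewers
    if max_latency_s > 1:
        return ["LL-HLS", "LL-DASH"]  # around 2s for one-way streaming
    return ["WebRTC", "HESP"]         # 1s or less


print(suggest_protocols(10))
print(suggest_protocols(2))
print(suggest_protocols(0.5))
print(suggest_protocols(0.5, bidirectional=True))
```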

At api.video, we firmly believe that HTTP-based low and ultra-low latency streaming protocols will win this battle in the end. The reasons are quite simple: these protocols involve much less work to implement in a legacy infrastructure, enjoy broader support across devices and browsers, scale more easily than WebRTC, and, because they don't carry prohibitive licensing fees, can be adopted by a larger number of companies.

This is why we're building an end-to-end edge video infrastructure based on HLS. We're confident that HLS will become the single streaming protocol that meets all video streaming use cases in the near future.
