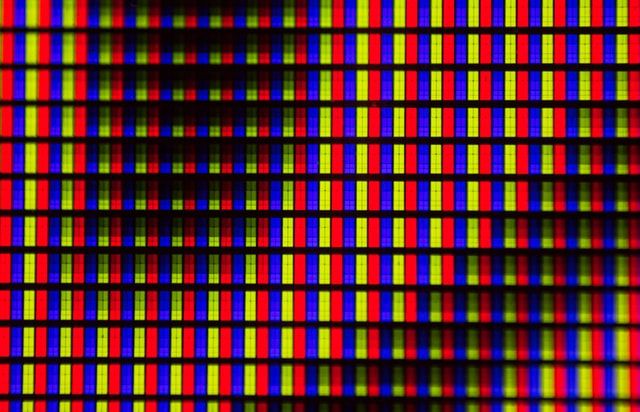

Pretty much all digital display devices today are based on some type of RGB (Red, Green, Blue) color model. RGB is the most efficient way to represent most colors that humans can see (there are a few colors it doesn't produce very well, but that's another discussion). Cameras, cellphones, televisions, computer screens and digital video all display color using RGB color models and RGB color spaces. You may be surprised to hear this, after all, as a kid didn't you get taught the RYB (Red, Yellow, Blue) color model?

Why is the RYB color model not used?

While you probably got taught the RYB color model, RYB isn't used for a couple of reasons. First, it's not as effective at creating all the colors humans see. You can get more colors out of RGB. This is actually why we don't use other random color models. You could, for example, have a color model that's purple, blue and pink. You won't be able to make very many colors with it compared to RYB or RGB, but you can still do this if you wanted to. The second reason we don't use RYB is because the human eye is more sensitive to green. You are most sensitive to green, then to red, and a tiny bit to blue.

Why do we use RGB more than other color models?

Because these are the colors the human eye notices most, the RGB color model is used most often to create different color spaces. A color space is a combination of a color model and a way of interpreting or mapping those colors to what you'll see. There are many RGB-based color spaces. one common way of interpreting the RGB color model is to include an extra channel - this style of color space is called RGBa. It's one channel for each color - red, blue and green, then an extra channel that describes the transparency of each pixel in each channel.

RGB based color spaces that stick with an RGB representation create very high quality representations of the images and video. They're often used to generate computer graphics and capture video. There's just one little problem - RGB is not good for image processing or storage. As I mentioned a bit earlier, the human eye sees green best. This is partially because the human eye is most sensitive to luma, or how bright something is. Green is mixed in with brightness in human vision. Unfortunately, with RGB, brightness is mixed in to all the color channels, and there isn't an easy way to separate it out. So most of the time, you'll find an RGB colorspace applied to still images or very high end computer animation. For video, another, more compressable solution is needed.

Y'CbCr and YUV

Sometimes Y'CbCr and YUV are used interchangeably, which isn't accurate. YUV is for analog TV, and Y'CbCr is for digital video, but they're both using the same concept to achieve color compression.

For this color space, based on the RGB color model, the way the human eye perceives chroma (color) and luma (brightness) is capitalized on. Because you see luma better than color, the channels for color information are split out like this:

- Cb - blue minus luminance

- Cr - red minus luminance

- Y' - luminance

If you asked where the green is, you're not alone, that's a popular question. Because you see green as part of how you perceive luma, it's included in the Y' channel. Luminance is also accounted for in the other channels where you're subtracting it out. The benefit of this way of interpreting the RGB color model, is you can gather what your eye sees best into the luminance channel, leaving room to compress color in the other two channels. This technique can be used to make it easier to transmit video and to store video. The color compression is called chroma subsampling.

Chroma Subsampling in Y'CbCr and YUV

Because you can separate out luminance, in the remaining channels for red and blue you can use chroma subsampling to to compress the data. Chroma subsampling uses the notation x:y:z.

- x tells you how many luma pixels you're going to take in a sample. So if you had 2:y:z, you're sampling two pixels at a time. 4 is most popular, so you're more likely to see 4:y:z.

- y tells you how many chroma pixels to sample out of the first line of the data, and then every alternating line thereafter. So say you have 4:4:z. This would mean you're taking a luma sample of 4 pixels, so you go to the frame of your video (or your image) and in the first line, you're also taking a chroma sample of 4 pixels. So you didn't throw any data away.

- z tells you how many chroma pixels to sample out of the line of pixels after y. So let's say it's 4 again. You would sample 4 chroma pixels. The complete notation would be 4:4:4.

Now for the rest of the image, you would sample in blocks of 4 luma by 2 rows, with y being the first row, and z being the second, until everything was subsampled.

- 4:4:4 is rare, because its saving all the data, this is most often used for internal processing and otherwise not much. Sometimes this is called YUV-9 sampling, but that's when it's used with analog video

- 4:2:2 you're chucking half the color data per line. This might sound like a lot, but this is the subsampling method used for professional video authoring. It's also frequently used to capture video.

- 4:2:0 is used in many delivery codecs for broadcast, DVD/Blu-ray, PAL DV, HDV, AVCHD and the web. You may have seen it as YV12.

- 4:1:1 is used by NTSC DV25 formats like DV. This is a great format if you are capturing using interlaced video.

Resources

About api.video

api.video enables you to quickly and securely deliver on-demand and live-stream videos directly from your website, software, or app. Sign-up for a free sandbox account to try out api.video’s video API, or talk to our team to get a demo.