HLS Video Streaming

HTTP Live Streaming (HLS) is the current 'de facto' streaming standard on the web. The formal international standard is MPEG-DASH (ISO/IEC 23009-1), but HLS has gained such widespread use that it is the predominant streaming solution today. When you play a video hosted at api.video, you are watching an HLS video stream.

In this post, we'll walk through what HLS streaming is, and how it can be used to stream video, including streaming multiple video sizes and bitrates.

The <video> tag

HTML5 natively supports video with the <video> tag. The usage generally looks like:

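```
<!-- Attributes such as controls, autoplay, muted and poster go on <video> -->
<video controls poster="poster.jpg">
  <!-- The browser plays the first source whose format it supports -->
  <source src="video.webm" type="video/webm">
  <source src="video.mp4" type="video/mp4">
</video>
```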

On the <video> tag you set your attributes, and then link to the video files with <source> tags. If there are multiple <source> tags, the browser plays the first format it supports.

When the browser loads the page (or when the user presses play, depending on the preload attribute), it begins downloading the video. Only one version of the video is downloaded, whatever the screen size.

When the video is larger than the screen, the phone has to downscale it to display it correctly, using extra CPU and battery. If the video bitrate is higher than the available network bandwidth, the video cannot play smoothly, and playback will stall.

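Another point to consider for longer videos: if a user seeks ahead and the browser cannot jump straight to that point in the file (for example, when the server does not support HTTP range requests), playback only resumes once every byte before the selected position has been downloaded.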

A Video Stream

At its simplest, a video stream is created by breaking the video into small segments (or chunks), traditionally MPEG transport stream files with the extension .ts. The segments are often numbered so that they are easy for humans to read. The order and names of these segments are stored in a manifest file (typically with the extension .m3u8).
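To make the structure concrete, here is a minimal sketch of writing such a manifest for segments that have already been encoded. The function name write_media_playlist is our own (hypothetical, not an api.video API), and it emits only the basic tags; a real packager also handles encryption, keyframe alignment and more.

```python
import math


def write_media_playlist(segments: list[tuple[str, float]]) -> str:
    """Build a minimal VOD media playlist from (filename, duration) pairs.

    Sketch only: no encryption key, byte ranges or discontinuities.
    """
    # EXT-X-TARGETDURATION must be an integer no smaller than any segment
    # duration rounded to the nearest integer; ceil() is a safe choice.
    target = max(math.ceil(duration) for _, duration in segments)
    lines = [
        "#EXTM3U",
        "#EXT-X-VERSION:3",  # version 3 allows decimal #EXTINF durations
        f"#EXT-X-TARGETDURATION:{target}",
        "#EXT-X-MEDIA-SEQUENCE:0",
        "#EXT-X-PLAYLIST-TYPE:VOD",
    ]
    for filename, duration in segments:
        # Each segment: an #EXTINF tag with its duration, then its URI.
        lines.append(f"#EXTINF:{duration:.6f},")
        lines.append(filename)
    lines.append("#EXT-X-ENDLIST")  # no more segments will be added
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Seven 4-second segments and a shorter final one, like api.video's example.
    durations = [4.0] * 7 + [1.8]
    print(write_media_playlist([(f"{i:04d}.ts", d) for i, d in enumerate(durations)]))
```

Using ceil() rather than rounding keeps the target duration valid even when a segment runs slightly over 4 seconds.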

Here is a manifest file from api.video:

```
#EXTM3U
#EXT-X-VERSION:3
#EXT-X-ALLOW-CACHE:NO
#EXT-X-TARGETDURATION:4
#EXT-X-MEDIA-SEQUENCE:0
#EXT-X-PLAYLIST-TYPE:VOD
#EXT-X-KEY:METHOD=AES-128,URI="aes.key",IV=0x1846cb99f30c689d11f79b110ce16cf7
#EXTINF:4.000000,
0000.ts
#EXTINF:4.000000,
0001.ts
#EXTINF:4.000000,
0002.ts
#EXTINF:4.000000,
0003.ts
#EXTINF:4.000000,
0004.ts
#EXTINF:4.000000,
0005.ts
#EXTINF:4.000000,
0006.ts
#EXTINF:1.800000,
0007.ts
#EXT-X-ENDLIST
```

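The first 7 lines are header tags describing the file. EXT-X-TARGETDURATION gives the maximum segment duration (4 seconds), EXT-X-PLAYLIST-TYPE:VOD marks this as video on demand (the list of segments will not change), and EXT-X-KEY says the segments are encrypted with AES-128, with the key available at aes.key.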

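Each segment is then listed as an #EXTINF tag giving its duration in seconds (4.000000 for most segments, 1.800000 for the last one), followed by the segment's filename. #EXT-X-ENDLIST marks the end of the playlist.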
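A player reads this list before requesting any video. Here is a minimal parsing sketch; Segment and parse_media_playlist are hypothetical names, and everything except #EXTINF and the segment URIs is ignored.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Segment:
    uri: str
    duration: float  # seconds, from the #EXTINF tag


def parse_media_playlist(text: str) -> list[Segment]:
    """Return the segments of an HLS media playlist in playback order.

    Sketch only: reads #EXTINF tags and segment URIs, ignores all other tags
    (keys, byte ranges, discontinuities, ...).
    """
    segments: list[Segment] = []
    pending: Optional[float] = None  # duration waiting for its URI line
    for raw in text.splitlines():
        line = raw.strip()
        if not line:
            continue  # blank lines carry no meaning
        if line.startswith("#EXTINF:"):
            # "#EXTINF:<duration>,[<title>]": keep only the duration.
            pending = float(line[len("#EXTINF:"):].split(",", 1)[0])
        elif line.startswith("#"):
            continue  # any other tag, or a comment
        elif pending is not None:
            # The first URI line after #EXTINF belongs to that segment.
            segments.append(Segment(uri=line, duration=pending))
            pending = None
    return segments
```

Run against the api.video manifest above, it returns eight segments, 0000.ts through 0007.ts, with a total duration of 7 × 4 + 1.8 = 29.8 seconds.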

Skipping ahead

As mentioned earlier, skipping ahead in a progressively downloaded MP4 can require all of the video before the new point to be downloaded. If you skip one hour ahead in a movie, the player may need to download 60 minutes of video.

If you seek ahead in a video stream, the player can instead calculate which segment to request next. The example above is short, but if you seek to t=22 seconds, the player can quickly work out that this moment falls in 0005.ts (which covers 20 to 24 seconds), and skip any .ts segments 'in between' that have not been downloaded yet.

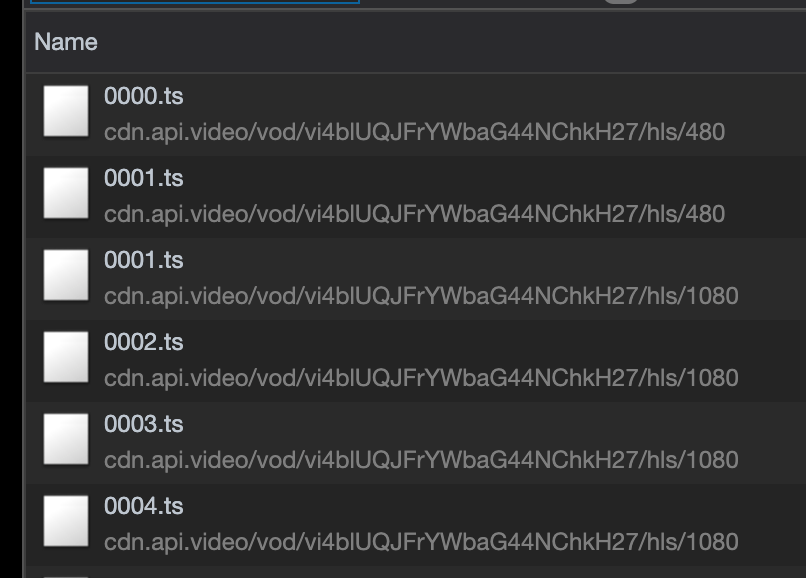
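The seek calculation only needs the durations from the manifest. A minimal sketch (segment_index_for_time is a hypothetical name) that uses running totals of the #EXTINF durations, so it also works when segments are not all the same length:

```python
from bisect import bisect_right
from itertools import accumulate


def segment_index_for_time(durations: list[float], t: float) -> int:
    """Index of the segment containing playback time t (seconds).

    Segment i covers [start_i, end_i), where end_i is the running total of
    the durations up to and including segment i.
    """
    if not durations:
        raise ValueError("playlist has no segments")
    ends = list(accumulate(durations))  # end time of each segment
    if t < 0 or t >= ends[-1]:
        raise ValueError(f"t={t} is outside the playlist (0 to {ends[-1]} s)")
    # The first segment whose end time is greater than t contains t.
    return bisect_right(ends, t)


if __name__ == "__main__":
    durations = [4.0] * 7 + [1.8]  # the api.video example manifest
    i = segment_index_for_time(durations, 22)
    print(f"{i:04d}.ts")  # prints 0005.ts
```

With fixed 4-second segments, floor(t / 4) gives the same answer; the running-total version avoids assuming that.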

Multiple Video Streams

In practice, it is rare to have just one video stream inside an HLS video; you usually have several versions of the same video. Here at api.video, each video is encoded at 240p, 360p, 480p, 720p, 1080p, and 2160p (up to the size of the original video).

Each of these versions has its own manifest file like the one above. But how does the player know which versions exist?

The Master Manifest

The first file of the video delivered to the video player is the master manifest. It is the 'menu' that lists all of the streams available to consume:

```
#EXTM3U
#EXT-X-STREAM-INF:PROGRAM-ID=1,BANDWIDTH=275000,RESOLUTION=134x240,CODECS="avc1.66.30,mp4a.40.2"
240/manifest.m3u8
#EXT-X-STREAM-INF:PROGRAM-ID=1,BANDWIDTH=880000,RESOLUTION=202x360,CODECS="avc1.66.30,mp4a.40.2"
360/manifest.m3u8
#EXT-X-STREAM-INF:PROGRAM-ID=1,BANDWIDTH=2860000,RESOLUTION=404x720,CODECS="avc1.66.30,mp4a.40.2"
720/manifest.m3u8
#EXT-X-STREAM-INF:PROGRAM-ID=1,BANDWIDTH=1540000,RESOLUTION=270x480,CODECS="avc1.66.30,mp4a.40.2"
480/manifest.m3u8
#EXT-X-STREAM-INF:PROGRAM-ID=1,BANDWIDTH=4840000,RESOLUTION=608x1080,CODECS="avc1.66.30,mp4a.40.2"
1080/manifest.m3u8
#EXT-X-STREAM-INF:PROGRAM-ID=1,BANDWIDTH=17600000,RESOLUTION=1080x1920,CODECS="avc1.66.30,mp4a.40.2"
2160/manifest.m3u8
```

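Each version of the stream gets its own entry. Entries are often listed from lowest to highest quality, although the order is not required (here, 720p appears before 480p). Let's look at the last two lines, which define the highest-quality stream (the 2160 rendition):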

```
#EXT-X-STREAM-INF:PROGRAM-ID=1,BANDWIDTH=17600000,RESOLUTION=1080x1920,CODECS="avc1.66.30,mp4a.40.2"
2160/manifest.m3u8
```

The first line gives the peak bandwidth needed to play this stream (BANDWIDTH=17600000 bits per second, or 17.6 Mbps), its resolution (1080x1920), and its codecs: H.264 video (avc1.66.30) and AAC audio (mp4a.40.2).

The second line is the link to the manifest for that stream that will list each segment's length and location.
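Reading the master manifest is mostly a matter of parsing each #EXT-X-STREAM-INF attribute list. The one subtle point is that CODECS is quoted and contains a comma, so a naive split(",") would break it. A minimal sketch, with hypothetical names (Variant, parse_attributes, parse_master_playlist):

```python
from __future__ import annotations

import re
from dataclasses import dataclass

# One ATTRIBUTE=value pair; a quoted value may itself contain commas.
_ATTR_RE = re.compile(r'([A-Z0-9-]+)=("[^"]*"|[^,]*)')


@dataclass
class Variant:
    uri: str
    bandwidth: int  # peak bits per second
    resolution: tuple[int, int] | None  # (width, height), if given
    codecs: str


def parse_attributes(attr_list: str) -> dict[str, str]:
    """Parse an attribute list like 'BANDWIDTH=275000,CODECS="a,b"'."""
    return {key: value.strip('"') for key, value in _ATTR_RE.findall(attr_list)}


def parse_master_playlist(text: str) -> list[Variant]:
    """List the variant streams announced by #EXT-X-STREAM-INF tags."""
    variants: list[Variant] = []
    attrs: dict[str, str] | None = None
    for raw in text.splitlines():
        line = raw.strip()
        if line.startswith("#EXT-X-STREAM-INF:"):
            attrs = parse_attributes(line.split(":", 1)[1])
        elif line and not line.startswith("#") and attrs is not None:
            # The URI line right after the tag points at that variant's playlist.
            res = attrs.get("RESOLUTION")
            width_height = tuple(int(n) for n in res.split("x")) if res else None
            variants.append(
                Variant(
                    uri=line,
                    bandwidth=int(attrs["BANDWIDTH"]),
                    resolution=width_height,
                    codecs=attrs.get("CODECS", ""),
                )
            )
            attrs = None
    return variants
```

Because entries need not be in order, a player would typically sort the result, for example with sorted(variants, key=lambda v: v.bandwidth).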

Player Power

The details in this master manifest give the video player a lot of power. With a plain <video> tag, there is one 1080p MP4 to play, regardless of poor network conditions or a small screen.

The player runs on the local device, so it knows the available screen size and can request the rendition that fits the screen, avoiding wasted data transfer. The device no longer has to download an oversized video and downscale it on the fly.
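One way to express "the proper size for the screen" is to pick the smallest rendition that never needs upscaling. This is a minimal sketch, assuming the screen size is known in physical pixels and the video is scaled to fit inside it; Rendition and pick_for_screen are hypothetical names, and real players may use the player element's size instead of the whole screen.

```python
from dataclasses import dataclass


@dataclass
class Rendition:
    name: str
    width: int  # pixels, from RESOLUTION
    height: int


def pick_for_screen(renditions: list[Rendition], screen_w: int, screen_h: int) -> Rendition:
    """Smallest rendition that will not need upscaling on this screen.

    Scaled to fit inside the screen with its aspect ratio preserved, a
    rendition is displayed at its native size or smaller as soon as it fills
    the screen in at least one dimension.
    """
    by_size = sorted(renditions, key=lambda r: r.width * r.height)
    for r in by_size:
        if r.width >= screen_w or r.height >= screen_h:
            return r
    return by_size[-1]  # screen is bigger than every rendition
```

For a hypothetical 390x844-pixel portrait phone and the resolutions in the master manifest above, this picks the 720 rendition (404x720): 404 pixels already fill the screen width, so anything larger would only be downscaled.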

The player can also track network throughput by measuring how quickly each segment downloads. If the video playing is 1080p (4.84 Mbps) and the network speed suddenly drops to 3.0 Mbps, the player can quickly switch to the 720p stream, which at 2.86 Mbps can still play smoothly.
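The throughput tracking and switching logic can be sketched in a few lines. This assumes an exponentially weighted moving average (EWMA) of per-segment throughput, which is one common approach; production players use more elaborate estimators. ThroughputEstimator, pick_for_bandwidth, alpha and safety are hypothetical names.

```python
class ThroughputEstimator:
    """Exponentially weighted moving average (EWMA) of segment throughput.

    A simplified sketch; production players use more elaborate estimators.
    """

    def __init__(self, alpha: float = 0.5) -> None:
        self.alpha = alpha  # weight of the newest measurement
        self.estimate_bps = None  # no estimate before the first segment

    def add_sample(self, size_bytes: int, seconds: float) -> float:
        """Record one segment download and return the updated estimate."""
        sample_bps = size_bytes * 8 / seconds
        if self.estimate_bps is None:
            self.estimate_bps = sample_bps
        else:
            self.estimate_bps = self.alpha * sample_bps + (1 - self.alpha) * self.estimate_bps
        return self.estimate_bps


def pick_for_bandwidth(bandwidths: dict[str, int], throughput_bps: float, safety: float = 1.0) -> str:
    """Name of the highest-BANDWIDTH stream that fits the throughput budget.

    safety < 1 keeps some headroom; falls back to the lowest stream if none fit.
    """
    budget = throughput_bps * safety
    fitting = [name for name, bw in bandwidths.items() if bw <= budget]
    if not fitting:
        return min(bandwidths, key=bandwidths.get)
    return max(fitting, key=bandwidths.get)


if __name__ == "__main__":
    # BANDWIDTH values from the master manifest above.
    bandwidths = {"240": 275000, "360": 880000, "480": 1540000,
                  "720": 2860000, "1080": 4840000, "2160": 17600000}
    print(pick_for_bandwidth(bandwidths, 3_000_000))  # 720
```

With safety=0.8, the same 3.0 Mbps measurement becomes a 2.4 Mbps budget and the 480p stream is chosen instead; how much headroom to leave is a design trade-off between quality and the risk of stalls.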

Video Startup

Have you ever started a movie on Netflix and noticed that the first few seconds were very low quality? When a stream begins, the player has no idea of the available bandwidth, so to ensure fast delivery it often starts with a low resolution (for example, 240p at 275 kbps). There are two things going on here:

1. Fast startup. Almost every connection can sustain 275 kbps, so the video will very likely play, and a 4-second segment at that bitrate (about 137 KB) downloads very quickly, which means the video starts quickly.

2. Math. After a couple of segments have downloaded, the player has measured the network speed and can jump to 1080p (or whatever the device can support).
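The startup arithmetic is simple enough to check directly: segment size is bitrate times duration, and download time is size divided by link throughput. This sketch treats the BANDWIDTH value as the actual bitrate (it is really a peak, so real segments are usually somewhat smaller) and assumes a hypothetical 5 Mbps connection; the function names are our own.

```python
def segment_bytes(bandwidth_bps: float, duration_s: float) -> float:
    """Approximate segment size: bitrate x duration, converted to bytes."""
    return bandwidth_bps * duration_s / 8


def download_seconds(bandwidth_bps: float, duration_s: float, link_bps: float) -> float:
    """Time to fetch one segment over a link with the given throughput."""
    return segment_bytes(bandwidth_bps, duration_s) * 8 / link_bps


if __name__ == "__main__":
    link = 5_000_000  # hypothetical 5 Mbps connection
    for name, bw in [("240p", 275_000), ("1080p", 4_840_000)]:
        size_kb = segment_bytes(bw, 4) / 1000
        secs = download_seconds(bw, 4, link)
        print(f"{name}: {size_kb:.1f} kB per 4 s segment, {secs:.2f} s to download")
```

On that link, the first 240p segment (137.5 kB) arrives in about 0.22 s, while a 1080p segment (2420 kB) takes about 3.87 s, almost its entire 4-second playback time.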

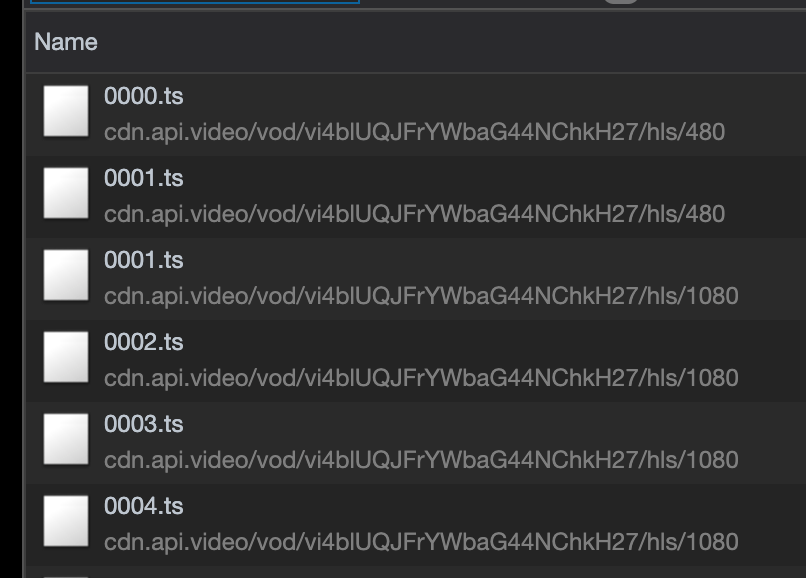

In the image above, 0000.ts and 0001.ts were first downloaded at 480p; 0001.ts was then requested again at 1080p, and from there on the player stays on the 1080p stream.

It's likely that the second segment (0001.ts) plays at 1080p, so only the first 4 seconds are at lower quality. That first segment was delivered quickly, and the stream switched to a higher quality soon after, making the overall experience a positive one for the viewer.

So the end user sees a fast video startup (maybe a bit blurry), but the video quickly improves to a crisp video.

What's next for HLS?

For live streaming, api.video shortens each segment to 2s. That way, if the player requires 2 segments to be buffered before playback, the delay is just 4s, not 8.

Apple has since published a specification for Low-Latency HLS (LL-HLS), in which each segment can be broken into smaller 'parts':

```
#EXTM3U
# This Playlist is a response to: GET https://example.com/2M/waitForMSN.php?_HLS_msn=273&_HLS_part=2
#EXT-X-TARGETDURATION:4
#EXT-X-VERSION:6
#EXT-X-SERVER-CONTROL:CAN-BLOCK-RELOAD=YES,PART-HOLD-BACK=1.0,CAN-SKIP-UNTIL=12.0
#EXT-X-PART-INF:PART-TARGET=0.33334
#EXT-X-MEDIA-SEQUENCE:266
#EXT-X-PROGRAM-DATE-TIME:2019-02-14T02:13:36.106Z
#EXT-X-MAP:URI="init.mp4"
#EXTINF:4.00008,
fileSequence266.mp4
#EXTINF:4.00008,
fileSequence267.mp4
#EXTINF:4.00008,
fileSequence268.mp4
#EXTINF:4.00008,
fileSequence269.mp4
#EXTINF:4.00008,
fileSequence270.mp4
#EXT-X-PART:DURATION=0.33334,URI="filePart271.0.mp4"
#EXT-X-PART:DURATION=0.33334,URI="filePart271.1.mp4"
#EXT-X-PART:DURATION=0.33334,URI="filePart271.2.mp4"
#EXT-X-PART:DURATION=0.33334,URI="filePart271.3.mp4"
#EXT-X-PART:DURATION=0.33334,URI="filePart271.4.mp4",INDEPENDENT=YES
#EXT-X-PART:DURATION=0.33334,URI="filePart271.5.mp4"
#EXT-X-PART:DURATION=0.33334,URI="filePart271.6.mp4"
#EXT-X-PART:DURATION=0.33334,URI="filePart271.7.mp4"
#EXT-X-PART:DURATION=0.33334,URI="filePart271.8.mp4",INDEPENDENT=YES
#EXT-X-PART:DURATION=0.33334,URI="filePart271.9.mp4"
#EXT-X-PART:DURATION=0.33334,URI="filePart271.10.mp4"
#EXT-X-PART:DURATION=0.33334,URI="filePart271.11.mp4"
#EXTINF:4.00008,
fileSequence271.mp4
#EXT-X-PROGRAM-DATE-TIME:2019-02-14T02:14:00.106Z
#EXT-X-PART:DURATION=0.33334,URI="filePart272.a.mp4"
#EXT-X-PART:DURATION=0.33334,URI="filePart272.b.mp4"
#EXT-X-PART:DURATION=0.33334,URI="filePart272.c.mp4"
#EXT-X-PART:DURATION=0.33334,URI="filePart272.d.mp4"
#EXT-X-PART:DURATION=0.33334,URI="filePart272.e.mp4"
#EXT-X-PART:DURATION=0.33334,URI="filePart272.f.mp4",INDEPENDENT=YES
#EXT-X-PART:DURATION=0.33334,URI="filePart272.g.mp4"
#EXT-X-PART:DURATION=0.33334,URI="filePart272.h.mp4"
#EXT-X-PART:DURATION=0.33334,URI="filePart272.i.mp4"
#EXT-X-PART:DURATION=0.33334,URI="filePart272.j.mp4"
#EXT-X-PART:DURATION=0.33334,URI="filePart272.k.mp4"
#EXT-X-PART:DURATION=0.33334,URI="filePart272.l.mp4"
#EXTINF:4.00008,
fileSequence272.mp4
#EXT-X-PART:DURATION=0.33334,URI="filePart273.0.mp4",INDEPENDENT=YES
#EXT-X-PART:DURATION=0.33334,URI="filePart273.1.mp4"
#EXT-X-PART:DURATION=0.33334,URI="filePart273.2.mp4"
#EXT-X-PRELOAD-HINT:TYPE=PART,URI="filePart273.3.mp4"
```

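As the comment on the second line says, this playlist is the response to GET https://example.com/2M/waitForMSN.php?_HLS_msn=273&_HLS_part=2. This is a blocking playlist reload: the _HLS_msn and _HLS_part query parameters ask the server to hold its response until part 2 of media segment 273 is available.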

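Segments 271 and 272 are each 4.00008 seconds long, and each is also listed as 12 'parts' of 0.33334 seconds (12 × 0.33334 = 4.00008). Parts marked INDEPENDENT=YES start with an independent frame, so a player can begin decoding there. The request was for segment 273, part 2, and the playlist indeed ends with filePart273.2.mp4. It also announces part 3 (filePart273.3.mp4) as an EXT-X-PRELOAD-HINT, meaning: "this part is not ready yet, but we know it is coming, so you can request it ahead of time."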

Segments 266 to 270 are listed without parts. They stay in the playlist so the viewer can 'rewind' a little: together they give the player about 20 seconds of video 'in the past'.
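To keep up with the live edge, the player asks for the next part it does not have yet. A minimal sketch, assuming a playlist laid out like Apple's example; next_part_request and blocking_reload_url are hypothetical names, and the sketch ignores details such as the server rolling over to the next segment and delta updates (CAN-SKIP-UNTIL).

```python
from urllib.parse import urlencode


def next_part_request(playlist: str) -> tuple[int, int]:
    """Return (_HLS_msn, _HLS_part) for the next part not yet in the playlist.

    Sketch only: starts from EXT-X-MEDIA-SEQUENCE, counts complete segments,
    and counts the EXT-X-PART lines of the segment still being produced.
    """
    msn = 0
    parts_so_far = 0
    for raw in playlist.splitlines():
        line = raw.strip()
        if line.startswith("#EXT-X-MEDIA-SEQUENCE:"):
            msn = int(line.split(":", 1)[1])
        elif line.startswith("#EXT-X-PART:"):
            parts_so_far += 1  # a part of segment `msn` (not EXT-X-PART-INF)
        elif line and not line.startswith("#"):
            # A segment URI: segment `msn` is complete, the next one begins.
            msn += 1
            parts_so_far = 0
    return msn, parts_so_far


def blocking_reload_url(playlist_url: str, msn: int, part: int) -> str:
    """Add the LL-HLS blocking-reload query parameters to a playlist URL."""
    # Assumes playlist_url has no query string of its own.
    return f"{playlist_url}?{urlencode({'_HLS_msn': msn, '_HLS_part': part})}"
```

Fed the playlist above, next_part_request returns (273, 3), the same part the EXT-X-PRELOAD-HINT announces, and blocking_reload_url("https://example.com/2M/waitForMSN.php", 273, 3) builds the URL ending in ?_HLS_msn=273&_HLS_part=3.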

Conclusion

HLS streaming has a number of advantages over serving a single file through the native HTML5 <video> tag. It is responsive by default, so less data is wasted serving 'too large' video to small screens. It is also adaptive, so fluctuations in network speed are less likely to interrupt playback.

api.video uses HLS under the hood. Every video creation response includes the .m3u8 URL for the stream (useful if you are using a different player), and our player link and iframe embed link also stream via HLS.

Are you curious to see your video as a stream? Create an account and test in our developer's sandbox for free!