Here at api.video, we talk with lots of developers who are looking for ways to build cool and innovative services with video. Many are still figuring out their use cases and trying to match them to the technical capabilities of different video services.

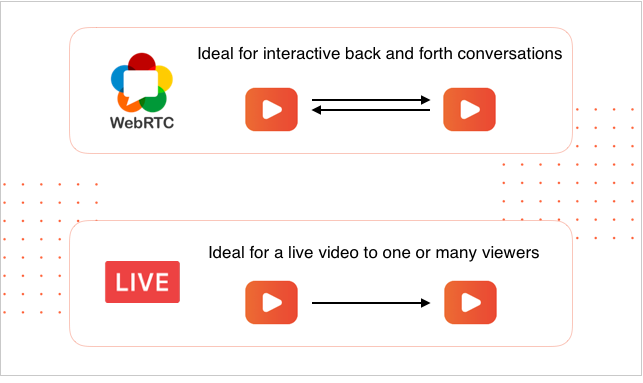

Live video streaming is one feature we get many questions about. At api.video, our live streaming solution uses HTTP Live Streaming (HLS). Another technology we are frequently asked about is WebRTC. Both are excellent technologies, but they suit very different applications. In this post, we’ll look at the features and advantages of each, and the applications each one is best suited for.

What is HLS?

HTTP Live Streaming (HLS) was developed by Apple and is currently the primary way video is streamed on the web. The “live” in the name is a bit of a misnomer, because the video does not need to be live: YouTube, Netflix and other video-on-demand services use HLS (among other formats) to stream video that is not live. This is also an advantage of using HLS for live streaming: the same files used to deliver the video live can later be used to play it back ‘on demand.’
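To make the live-to-on-demand point concrete, here is a minimal sketch of an HLS media playlist, the plain-text file that lists a stream’s segments. It assumes an EVENT-type playlist (one that only ever grows, so no segment is dropped) and hypothetical segment names such as `seg0.ts`. While the event is live, the playlist stays open; appending the standard `#EXT-X-ENDLIST` tag when the event ends tells players that no more segments are coming, and the very same segment files are then played back on demand.

```python
import math
from typing import List


def media_playlist(segment_uris: List[str], segment_seconds: float, ended: bool) -> str:
    """Build a minimal HLS media playlist (EVENT type) for a live recording."""
    lines = [
        "#EXTM3U",
        "#EXT-X-VERSION:3",
        # Target duration must be an integer no smaller than any segment length.
        f"#EXT-X-TARGETDURATION:{math.ceil(segment_seconds)}",
        "#EXT-X-MEDIA-SEQUENCE:0",
        # EVENT playlists only grow, so every segment recorded stays listed.
        "#EXT-X-PLAYLIST-TYPE:EVENT",
    ]
    for uri in segment_uris:
        lines.append(f"#EXTINF:{segment_seconds:.3f},")
        lines.append(uri)
    if ended:
        # No more segments will be added: players stop polling for updates
        # and can seek anywhere, i.e. the stream is now on-demand.
        lines.append("#EXT-X-ENDLIST")
    return "\n".join(lines) + "\n"


segments = ["seg0.ts", "seg1.ts", "seg2.ts"]  # hypothetical segment files
print(media_playlist(segments, 6.0, ended=False))  # during the live event
print(media_playlist(segments, 6.0, ended=True))   # after it ends
```

Production live playlists often use a sliding window that drops old segments instead; in that case the full recording has to be kept separately for on-demand playback.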

The ‘HTTP’ in HLS indicates that it uses standard web protocols to transmit the data over a TCP connection. The video is cut into small segments, which the player on the user’s device downloads from the server and stitches back together for playback, following a text-based manifest file that lists the segments. What makes this powerful is that if you have several versions of the same video (different dimensions and bitrates), the player can pick the version best suited to each user’s actual conditions (primarily network speed and screen size), and switch between versions as those conditions change.
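The version selection described above can be sketched as follows. The sketch parses a master playlist (the manifest that lists each version as an `#EXT-X-STREAM-INF` entry with `BANDWIDTH` and `RESOLUTION` attributes) and then applies a deliberately simple, hypothetical rule: take the highest-bitrate version that fits within 80% of the measured throughput and does not exceed the screen height. Real players such as Safari or hls.js use their own, more elaborate heuristics; the playlist contents and the `headroom` value here are illustrative assumptions.

```python
import re
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class Variant:
    bandwidth: int                         # BANDWIDTH attribute, bits per second
    resolution: Optional[Tuple[int, int]]  # RESOLUTION attribute, if present
    uri: str                               # playlist URI on the following line


def parse_master_playlist(text: str) -> List[Variant]:
    """Extract the variant streams listed in an HLS master playlist."""
    lines = [line.strip() for line in text.splitlines() if line.strip()]
    variants = []
    for i, line in enumerate(lines):
        if not line.startswith("#EXT-X-STREAM-INF:"):
            continue
        attrs = line.split(":", 1)[1]
        # Anchor on start-or-comma so AVERAGE-BANDWIDTH is not matched.
        bandwidth = int(re.search(r"(?:^|,)BANDWIDTH=(\d+)", attrs).group(1))
        res = re.search(r"RESOLUTION=(\d+)x(\d+)", attrs)
        resolution = (int(res.group(1)), int(res.group(2))) if res else None
        # Simplification: assume the URI is the next non-empty line.
        variants.append(Variant(bandwidth, resolution, lines[i + 1]))
    return variants


def choose_variant(variants: List[Variant], measured_bps: float,
                   screen_height: int, headroom: float = 0.8) -> Variant:
    """Hypothetical heuristic: best variant that fits the network and screen."""
    fits = [
        v for v in variants
        if v.bandwidth <= measured_bps * headroom
        and (v.resolution is None or v.resolution[1] <= screen_height)
    ]
    if not fits:
        # Nothing fits: fall back to the lightest stream rather than none.
        return min(variants, key=lambda v: v.bandwidth)
    return max(fits, key=lambda v: v.bandwidth)


MASTER = """#EXTM3U
#EXT-X-STREAM-INF:BANDWIDTH=800000,RESOLUTION=640x360
360p.m3u8
#EXT-X-STREAM-INF:BANDWIDTH=2800000,RESOLUTION=1280x720
720p.m3u8
#EXT-X-STREAM-INF:BANDWIDTH=5000000,RESOLUTION=1920x1080
1080p.m3u8
"""

variants = parse_master_playlist(MASTER)
print(choose_variant(variants, measured_bps=4_000_000, screen_height=1080).uri)  # → 720p.m3u8
```

On a 4 Mbps connection the 1080p version (5 Mbps) is skipped even on a 1080p screen; on a faster connection with a 720p screen, the 720p version is chosen because the larger one would be wasted.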

When a live video is transmitted with HLS, the video is sent to a server and transcoded into segments for delivery to end users. This makes HLS ideal for one-to-many live streaming: once the segments are written, they are served like any other HTTP response, so the main limit is the capacity of the server hosting the video (and with a CDN in front of it, that load can be reduced further).

Latency: HLS streaming typically has about 6-15 seconds of latency, and depending on the architecture it can be even longer. Because the video is transcoded into segments of finite length, each segment can only be sent once it is complete, so the video is held up for at least one segment length. To protect against playback stalls, some players also require 2 segments in the playback buffer before playback begins, pushing playback even further behind the live event.
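The arithmetic behind these numbers can be sketched with a simplified model: if the player waits for a given number of complete segments and starts with the oldest one, playback trails the live edge by roughly that many segment lengths, plus whatever the rest of the pipeline adds. The `pipeline_seconds` term (ingest, transcoding, CDN and download time lumped together) and the 6-second and 2-second segment lengths are illustrative assumptions, not measurements of any particular service.

```python
def estimate_hls_latency(segment_seconds: float, buffered_segments: int,
                         pipeline_seconds: float = 0.0) -> float:
    """Simplified model of how far HLS playback trails the live event, in seconds.

    A segment can only be published once it is complete, and the player
    starts playing the oldest of the `buffered_segments` it waited for, so
    playback trails the live edge by roughly that many segment lengths.
    `pipeline_seconds` lumps together ingest, transcoding, CDN and download
    time, which this sketch does not model separately.
    """
    if segment_seconds <= 0 or buffered_segments < 1:
        raise ValueError("need a positive segment length and at least one segment")
    return buffered_segments * segment_seconds + pipeline_seconds


print(estimate_hls_latency(6, 2, pipeline_seconds=1.5))  # 13.5
print(estimate_hls_latency(2, 2, pipeline_seconds=1.5))  # 5.5
```

The model shows why shorter segments are the main lever for lower HLS latency: the buffered-segment term shrinks in direct proportion to the segment length.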

NOTE: Work is actively under way to reduce HLS latency, and it is expected to improve over the coming months (api.video is investing in bringing low latency HLS to our users in the very near future).

HLS Summary: A robust solution for streaming to many users. Today, HLS typically adds 6-15 seconds or more of latency, which makes it a poor fit for interactive presentations. HLS videos are also available for playback after the live event.

What is WebRTC?

“Web Real-Time Communication,” or WebRTC, is a W3C standard supported in all major browsers that allows for, as you might have guessed, real-time communication. WebRTC delivers media almost instantly, typically in under 300 ms, making it ideal for interactive back-and-forth conversations such as web conferencing.

The lower latency comes partly from using UDP instead of TCP, so a late or lost packet does not hold up all the data behind it as it would on a TCP connection, and partly because the media does not need to be transcoded into segments before it is sent.

However, because video is delivered directly between peers, the number of users who can share video is limited, typically to fewer than 6. Some WebRTC implementations let a small number of users (under 5) share their video while a larger group watches in real time, but that audience is usually still small (under 300).
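One way to see why the number of people sharing video stays small is to count the cost of a full-mesh call, in which every participant sends its video directly to every other participant. The full-mesh topology is an assumption here (many products route video through a server instead), and the 1 Mbps per stream figure comes from the bandwidth estimate in this post.

```python
from typing import NamedTuple


class MeshCost(NamedTuple):
    peer_connections: int          # connections across the whole call
    upload_mbps_per_peer: float    # each sender uploads one copy per other peer
    download_mbps_per_peer: float  # each peer receives every other peer's video


def full_mesh_cost(participants: int, stream_mbps: float = 1.0) -> MeshCost:
    """Cost of a full-mesh call: every participant sends video to every other."""
    others = participants - 1
    return MeshCost(
        peer_connections=participants * others // 2,
        upload_mbps_per_peer=others * stream_mbps,
        download_mbps_per_peer=others * stream_mbps,
    )


for n in (2, 4, 6, 10):
    print(n, full_mesh_cost(n))
```

With 6 participants, the call already needs 15 peer connections, and each person must upload 5 Mbps and download 5 Mbps. Connections grow roughly with the square of the group size, which is why mesh calls stay small.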

Each person sharing video uses about 1 Mbps of bandwidth. With 4 people streaming, every viewer receives about 4 Mbps of video, so the bandwidth leaving the server quickly becomes the limit on how many users can join the stream. Additionally, users on poor networks may not be able to receive 4 Mbps of video, and may have trouble playing it back.
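The server-side arithmetic can be sketched directly from the figures above. This assumes a server that forwards every sender’s stream unchanged to every viewer, with no transcoding and no CDN; the 1 Gbps uplink and the 300-viewer audience are hypothetical values chosen for illustration.

```python
import math


def per_viewer_mbps(senders: int, stream_mbps: float = 1.0) -> float:
    """Each viewer receives one copy of every sender's stream."""
    return senders * stream_mbps


def server_egress_mbps(senders: int, viewers: int, stream_mbps: float = 1.0) -> float:
    """Total bandwidth leaving a server that forwards every stream to every viewer."""
    return per_viewer_mbps(senders, stream_mbps) * viewers


def max_viewers(senders: int, uplink_mbps: float, stream_mbps: float = 1.0) -> int:
    """How many viewers fit before the server's uplink is saturated."""
    return math.floor(uplink_mbps / per_viewer_mbps(senders, stream_mbps))


print(server_egress_mbps(4, 300))  # 1200.0 Mbps for 300 viewers
print(max_viewers(4, 1000))        # 250 viewers on a 1 Gbps uplink
```

Every additional viewer adds the full 4 Mbps to the server’s outgoing traffic, so even a 1 Gbps uplink runs out before reaching 300 viewers.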

To save the video for later playback, a WebRTC implementation can use the MediaRecorder API to record the stream on the local computer. That recording then has to be uploaded to a server before it can be played back later.

WebRTC Summary: Ideal for real-time communication, but suited to small groups: typically 1-4 people sharing video, and a small number of viewers.

Are HLS and WebRTC mutually exclusive?

Combining HLS and WebRTC is actually quite common. For example, if you are hosting a panel of speakers, they could join over WebRTC (like a Zoom call), and the call could then be streamed over HLS to a large audience (Zoom offers this as a feature for reaching a larger audience).

How are you using WebRTC and HLS in your video applications? Feel free to continue this conversation on our developer forum: community.api.video.